CatDV Pegasus Worker

Manual Addendum

Copyright (C) Square Box Systems Ltd. 2017-2021. All rights reserved.

Square Box Systems Ltd

Lake View House,

Wilton Drive,

Warwick,

CV34 6RG

United Kingdom

Pegasus Worker

This addendum to the main CatDV Worker Node manual covers features specific to Pegasus Worker:

· The new Worker Node architecture, with separate processes for the Worker Service and Worker UI. (This affects all editions of the worker but is particularly relevant to Pegasus Worker.)

· Running the Worker Service as a background system service

· Managing a cluster of worker nodes on different machines using the Worker Monitor dashboard application

· The Pegasus Worker REST API

· Distributed load balancing (worker farming)

· Configuring a containerised cloud instance

· Cloud ingest actions

Worker Node Architecture

Starting with Worker 7 we changed the architecture of the CatDV Worker Node.

Previously, Worker 6 and earlier ran as a desktop user process, with the user interface and the worker engine running in the same process:

· the engine maintains the queue of tasks to execute and has threads to scan a watch folder and to perform server queries and to pick the next task to perform off the queue;

· the user interface displays the task list, has checkboxes to control adding items to the tasks (server query or file watcher threads) or take them off the list (worker threads), and has an editor to let you edit the worker configuration.

As of Worker 7 the user interface and the worker engine are now separate processes, though with a regular Enterprise Worker license in most cases they continue to behave in exactly the same way as Worker 6: when you launch the worker application this starts the UI process and (after a short delay) automatically starts the worker service, and when you quit the UI process that also quits the worker service.

[Figure: Worker 7 architecture diagram. Labels: Worker UI, Worker Service, Task List, Worker Engine, File Watchers, Server Monitors, Worker Threads, SQL, XML, Config file, Log files.]

The difference is that it is now possible to run the worker service as a background system service, not just as a user session process, so it starts up automatically when the machine starts up and doesn’t require a user to log in and launch the worker. Additionally, it is possible now to remotely administer worker nodes running on another machine.

A major difference from older versions, therefore, is that the worker can now run in one of two distinct environments: in the logged-on user session, or as a background system service.

Local session service

With a Workgroup or Restricted worker node license the worker continues to run as a desktop application within the logged in user session, the same way as it did in Worker 6.

Config files and log files are stored in the same place as before.

On Windows this is typically in:

· C:\Users\<user>\AppData\Local\Square Box\worker.xml

· C:\Users\<user>\AppData\Local\Square Box\Logs\CatDV Worker

(The exact location is based on the %LOCALAPPDATA%, %APPDATA%, or %USERPROFILE% environment variables).

On Mac OS X this is in:

· /Users/<user>/Library/Application Support/worker.xml

· /Users/<user>/Library/Logs/Square Box/CatDV Worker

When running as a local session service, the file access permissions, ownership, mounted drives, and so on for the worker are those of the user who runs the worker.

Background system service

New in Worker 7 is the ability to install the worker as a background system service so the worker engine runs as root (on Mac/Unix), or the Windows service user with administrator privileges (on Windows). From a technical viewpoint the worker service is installed and runs in exactly the same way as the CatDV Server except that the role of the control panel is now taken on by the worker UI.

When running as a background service the worker config files and log files are in different locations from the local session service.

On Windows they are typically in:

· C:\ProgramData\Square Box\CatDV Worker\worker.xml

· C:\ProgramData\Square Box\CatDV Worker\Logs

(This is based on the %ProgramData%, %PUBLIC%, or %ALLUSERSPROFILE%\Application Data environment variables.)

The CatDV Worker service is listed in the Windows Services control panel and controlled from there, just like the CatDV Server is.

On Mac OS X the config and log files are in:

· /Library/Application Support/CatDV Worker/worker.xml

· /Library/Logs/Square Box/CatDV Worker

The service is started from /Library/Application Support/CatDV Worker/Worker Service.app by /Library/LaunchDaemons/com.squarebox.catdv.worker.plist.

On Linux they are in the home directory of the user running the service (usually root) and in /var/log:

· /root/.catdv/worker.xml

· /var/log/catdvWorker
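The locations listed above can be summarised as a small lookup table. This is only an illustrative sketch: the function name `workerPaths` and the `mode` and `platform` keys are assumptions made for the example, and the paths are the typical defaults given in this section (with `<user>` left as a placeholder), not values read from the worker itself.

```javascript
// Typical config and log locations as listed in this section.
// The keys ("session"/"service", "windows"/"mac"/"linux") are hypothetical
// names chosen for this sketch, not identifiers used by the worker.
const WORKER_PATHS = {
  session: {
    windows: {
      config: "C:\\Users\\<user>\\AppData\\Local\\Square Box\\worker.xml",
      logs: "C:\\Users\\<user>\\AppData\\Local\\Square Box\\Logs\\CatDV Worker",
    },
    mac: {
      config: "/Users/<user>/Library/Application Support/worker.xml",
      logs: "/Users/<user>/Library/Logs/Square Box/CatDV Worker",
    },
  },
  service: {
    windows: {
      config: "C:\\ProgramData\\Square Box\\CatDV Worker\\worker.xml",
      logs: "C:\\ProgramData\\Square Box\\CatDV Worker\\Logs",
    },
    mac: {
      config: "/Library/Application Support/CatDV Worker/worker.xml",
      logs: "/Library/Logs/Square Box/CatDV Worker",
    },
    linux: {
      config: "/root/.catdv/worker.xml",
      logs: "/var/log/catdvWorker",
    },
  },
};

// Look up the typical locations for one environment and platform.
function workerPaths(mode, platform) {
  const byMode = WORKER_PATHS[mode];
  if (!byMode) throw new Error("Unknown mode: " + mode);
  const paths = byMode[platform];
  if (!paths) throw new Error("No documented location for " + mode + " on " + platform);
  return paths;
}

console.log(workerPaths("service", "linux").config);
```

Keeping the two environments in separate branches of the table mirrors the point made above: the local session service and the background system service never share a config file or log directory.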

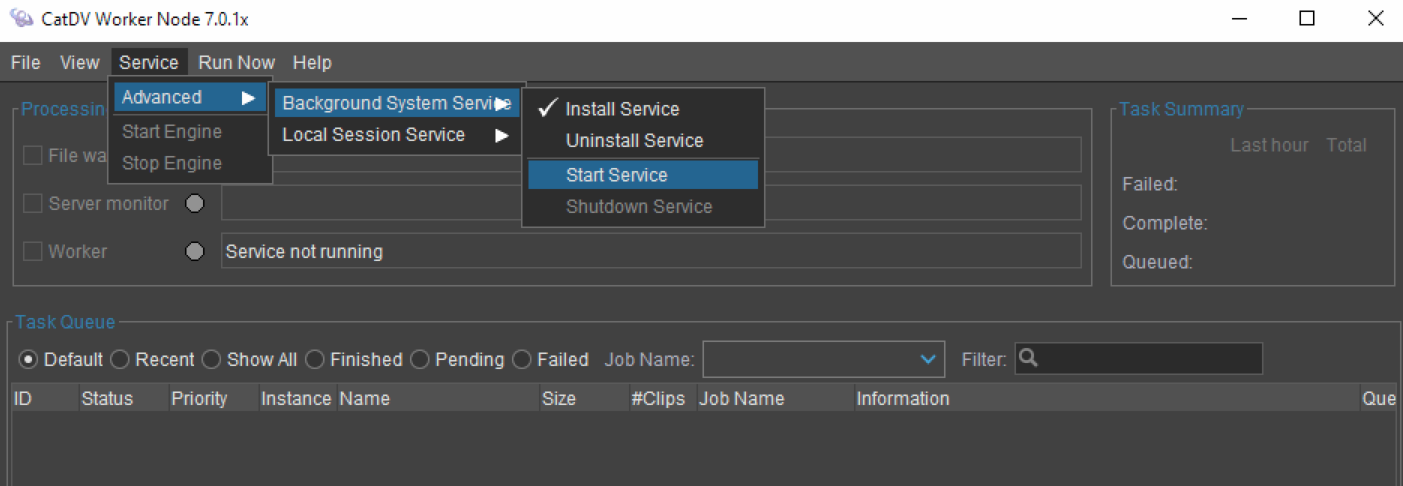

Installing the background service

Unlike the CatDV Server we don’t have a standalone installer to install or update the background service software. Instead, installing the service is done via commands in the “Service” menu in the worker UI.

On the Mac a copy of the worker software is installed in /Library/Application Support/CatDV Worker/Worker Service.app. It doesn’t use the one the worker UI was launched from as you could be running from a disk image or a copy of the software in your own home directory. The worker UI prompts you to authenticate when necessary in order to write to the system location and install the launch daemon.

On Windows the worker software is already installed in a fixed location (typically C:\Program Files\Square Box\CatDV Worker 7.0) and so it isn’t necessary to make a separate copy to run it as a background service. Instead, the worker just needs to be registered as a Windows system service so it starts up automatically in the background. To do this, run the worker UI as administrator then do Service > Advanced > Background Service > Install.

If you’re unable to connect to the background service from another machine you may need to add C:\Program Files\Square Box\CatDV Worker 7.0\lib\JavaHelper.exe to the allowed programs in the Windows firewall settings.

Worker service and worker engine states

We have been using the terms “worker service” and “worker engine” more or less interchangeably. They refer to the same process but the distinction depends on what role they are performing.

Even though they run within the same process, there is a logical distinction between the worker “service” (which responds to RMI requests from the worker UI to display the task list and control the engine) and the worker “engine” (the file watcher, server query, and worker threads that do the work). It is possible to start, stop, and restart the worker engine (eg. after editing the worker config file), while the worker service is running throughout.

The worker can therefore be in a number of different states:

· Service not running

If the service process isn’t running you can’t see the task list or administer the worker from a remote machine. You can edit the local worker configuration and view the log files by directly accessing the local files but you need to use the worker UI to start the service before you can do anything useful.

If you are using the background worker service then you can’t edit the config unless the service is running.

· Service running but engine not started

Once the service is running you can see the task list and administer the worker remotely, but the engine won’t start processing tasks until you start the file watcher, server monitor, and worker threads.

In the general section of the worker configuration you can specify whether to start the engine automatically as soon as the service starts or wait until you press the Start button.

You can also get back to this state (service running but engine stopped) once the engine has been running by pressing the Stop button and waiting for the threads to shut down.

· Engine running normally

Once the engine threads start up they start doing their work and you should see three green lights in normal operation.

· Service and engine running but threads suspended

If a fatal error occurs, or if you manually uncheck any of the worker control checkboxes, then the service and engine will both be running but the worker threads are paused until you enable them again. You can do this using the local worker UI or remotely from the Pegasus worker monitor.

Once started the worker service normally keeps running indefinitely, as that is the only way to access the worker from another machine.

If the worker service is shut down or dies for any reason then you will normally need to launch the local worker UI process to start it up again, though if it is installed as a background system service it will normally start up automatically when the system reboots (without requiring a user to log in and start the service manually).
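The four states above can be expressed as a simple decision sequence. The sketch below is illustrative only: the function name and its three boolean inputs are hypothetical and do not correspond to any worker API.

```javascript
// Classify the worker into one of the four states described above.
// serviceRunning: the service process is up and answering requests
// engineRunning:  the engine has been started (Start button or auto-start)
// threadsEnabled: none of the worker control checkboxes is unchecked or
//                 suspended after a fatal error
function describeWorkerState(serviceRunning, engineRunning, threadsEnabled) {
  if (!serviceRunning) return "Service not running";
  if (!engineRunning) return "Service running but engine not started";
  if (!threadsEnabled) return "Service and engine running but threads suspended";
  return "Engine running normally";
}

console.log(describeWorkerState(true, false, false));
```

The order of the checks matters: nothing can be said about the engine or its threads unless the service itself is reachable.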

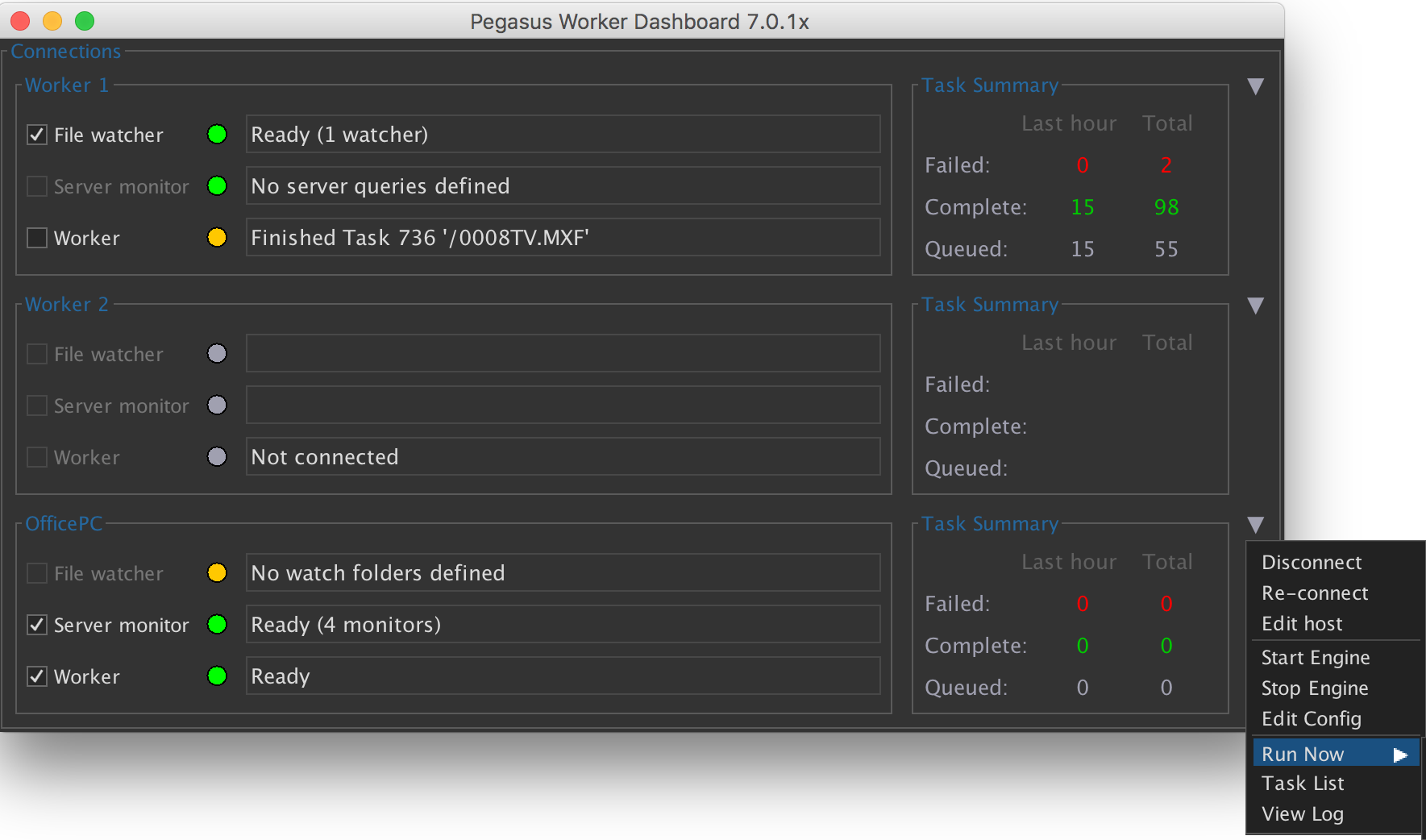

Pegasus Worker Monitor

To control Pegasus Worker instances running on other machines there is a new dashboard application, the “Worker Monitor”, that only communicates via the RMI interface, and therefore needs the worker service to be running on the remote machine.

Apart from not being able to do the initial install of the background service, or start it up if the service isn’t running, the Worker Monitor provides full remote access and management of the remote Pegasus Worker engine, including:

· Display and edit the task list (delete old tasks, resubmit tasks, change priority)

· Start and stop the engine

· View and control the traffic light status of the different worker threads

· View the log files (from the remote machine)

· Manually run a particular watch action now

· Edit the worker configuration (including seeing worker extensions, file choosers, and user defined fields from the point of view of the remote machine, not the local one the dashboard is running on)

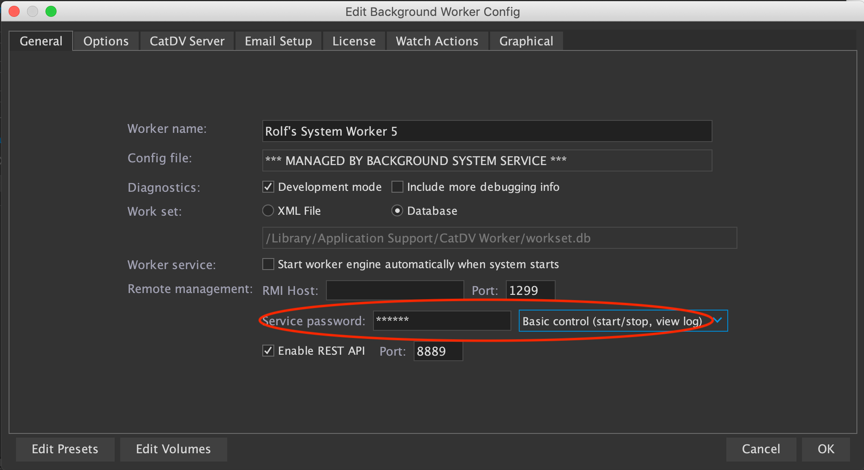

Allowing anyone with network access to remotely manage the worker node like this is an obvious security weakness and so to allow remote access it is necessary to set up a password on the worker engine. This is separate from the CatDV user name and password that the worker uses to connect to the CatDV Server and is purely there to protect the worker configuration from remote access.

To permit remote management of the worker you therefore first need to use the local worker UI app to enter a password for that worker instance, and choose what level of remote access to give:

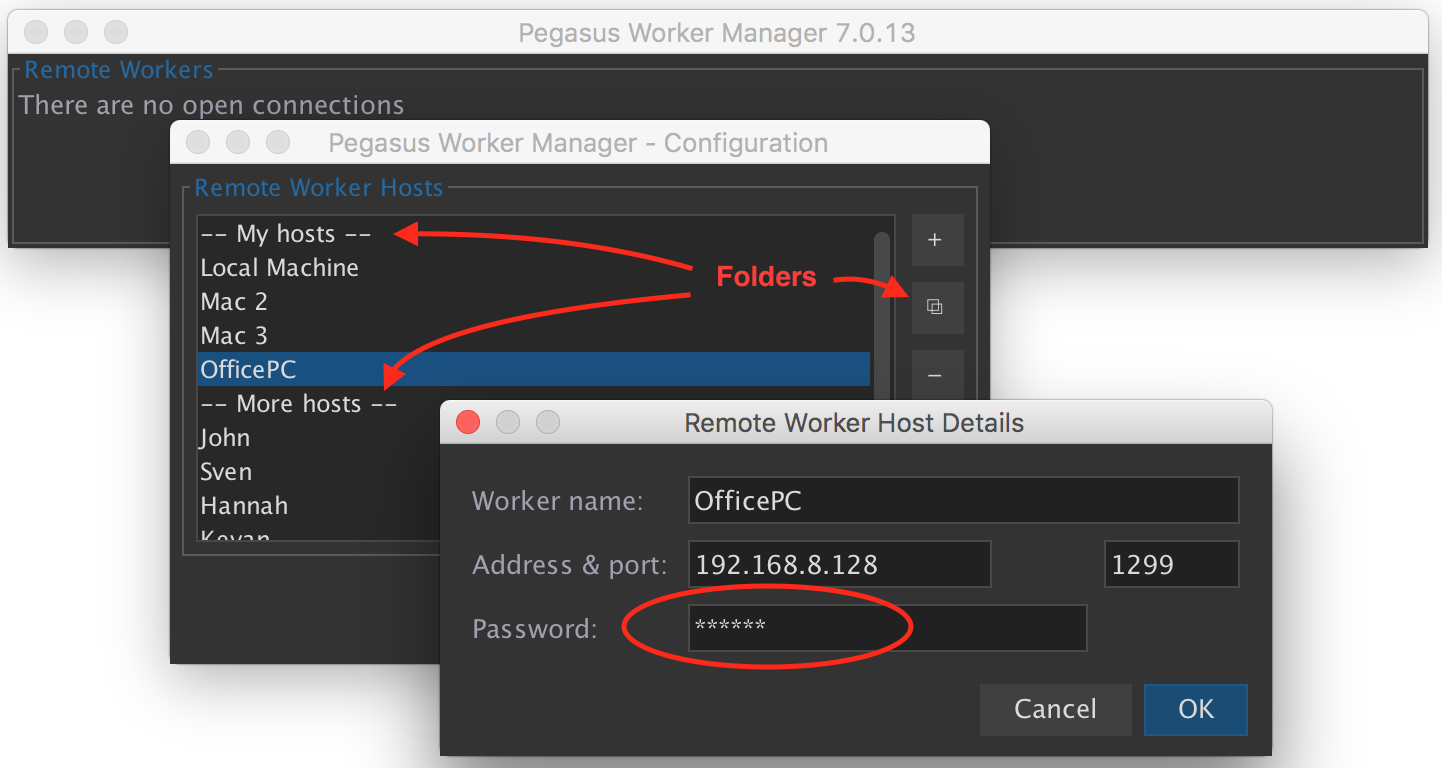

You then configure the Worker Monitor app to add the IP address or hostname of that worker instance, and enter the password here.

For convenience, you can organise the hosts you administer into different folders by using the button to insert a folder divider.

Note that you will need a Pegasus worker license for each machine that is to be administered remotely.

In the Worker Monitor dashboard you can either connect to all the hosts you have defined, to a folder of hosts, or individually to specific ones.

You can then see the status of all the connected workers at a glance, and administer individual worker installations by right clicking to show the pop up menu of available commands. You can also double click on the count of failed or completed tasks to view those tasks.

Local worker UI

The new Worker Monitor dashboard is specifically designed to administer Pegasus Workers running on other machines and only ever accesses the task list, worker config file, or log files using RMI requests to the service.

By contrast, the regular worker UI uses a mix of direct file access and RMI requests to connect to a worker service on the local machine:

· It views the task list by going via the worker service (so if the service isn’t running the task list will be blanked out).

· It views log files by directly accessing the file system (allowing you to diagnose problems starting the service, or a failure to connect to the service).

· It edits the worker configuration either via the worker service (if it is running) or by directly editing the worker.xml file.

If you are using the background system service then the only way to edit the config file is to go via the service (as the config file is owned by the administrator/root user and the worker UI will normally be running as a normal user space process and so won’t have access to the config file).

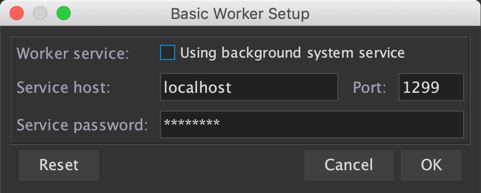

Basic setup of the worker UI

Use the File > Basic Setup command to tell the worker UI whether you are using the background service or not, and which port to connect to the service on:

Enter the password that the worker service is configured with.

This connection information is stored separately from the main configuration file in the user’s local workerUI preferences file:

· C:\Users\<user>\AppData\Local\Square Box\workerUI.prefs (Windows)

· /Users/<user>/Library/Application Support/workerUI.prefs (Mac)

Things to watch out for

Because the background service and remote monitoring differ from how the worker used to work, there are a number of gotchas and things to be aware of.

Be very clear whether you are using the system background service or the local session service, and to minimise confusion avoid switching between them too much – perhaps even delete the log files and configuration from the other environment. (If you’re not careful then depending on how you launch the UI and whether the service is running or not it’s conceivable that you might see the task list from one environment and old log files from the other, for example.)

It’s highly recommended that you don’t ever change the port from 1299. It’s confusing enough that you can have the worker running from two different locations and under two different user accounts, but having a fixed port number at least ensures that only one or the other of the instances can be running at a time!

To install the background system service you need to authenticate as Administrator. Under Windows, right click on the application and choose Run As Administrator. On OS X launch the UI as normal and use the Service menu to install the background service and you will be prompted to enter an administrator password.

The worker UI communicates with the worker service using Java RMI, in exactly the same way as the CatDV desktop app talks to the CatDV Server. Similar issues apply therefore, in that ideally you need to tell the server (ie. the worker service) what IP address clients (ie. the Pegasus Worker dashboard running on another machine) will use to access the server. This is less critical now however as we use the same brute force method to rewrite the IP address of the remote server object as we started doing in the client, so in most cases it should work even if you leave the host address blank in the worker config.

There are bootstrapping issues and contradictory requirements which complicate the situation. For example, if you’re using the local service it is necessary to be able to edit the config file before the service is running, in order to enter initial license codes, port numbers, etc. But if you’re using the background service you might not have write access to the config file directory other than via the service, so you need to install and start the background service first before you’re able to edit the configuration!

When you first install the background service it won’t have its own config file and so when you edit the background service configuration for the first time it will import any settings and watch actions from the local configuration (including the location of the workset file, so you may want to change that). You can only see and edit the background system service config file when the background service is running, so take care not to use the worker UI to try and edit the config when the service is not running otherwise you may get confused because you see the old contents of the original local config file.

Licensing requirements

To run as a background system service you need a Pegasus Worker or Enterprise Worker license (not Workgroup Worker or Restricted Worker).

You don’t need a special license code to run the Worker Monitor application but you can only connect to Worker Nodes on remote machines if they have a Pegasus Worker license. (If you’d like to test the Worker Monitor you can use it to connect to a worker on the local machine even if it doesn’t have a Pegasus Worker license.)

Pegasus Worker has other additional features, most notably it supports worker farming using the distributed load balancing option, where several workers on different machines can coordinate their work and only pull one item at a time to work on from the queue on the CatDV Server.

REST API

If you have a Pegasus Worker license you can use the new REST API to remotely monitor the status of the worker, query the task list, and even remotely submit jobs for processing. The REST API is designed for use by the CatDV pro services team or by third party systems integrators. It is provided as an alternative remote monitoring tool to the Worker Monitor app. Both remote monitoring technologies require a Pegasus Worker license and you can use either or both depending on your requirements.

The REST API is disabled by default. To use it you need to enable the REST service by checking the option at the bottom of the General tab of the worker config editor, and also select the port to use.

The following request endpoints are available in the REST API:

GET http://localhost:8889/info

Display basic version information about the worker to confirm the REST service is running.

{"status":"OK", "data":{"version":"CatDV Worker Node 8.0b6"}}

GET http://localhost:8889/status

Display the current status of the worker:

{"status":"OK", "data":{"status":{"seqNum":75, "systemService":false, "running":false, "shuttingDown":false, "message":"Engine stopped", "enableFileChk":false, "fileChk":false, "fileColor":{}, "fileMsg":null, "enableServerChk":false, "serverChk":false, "serverColor":{}, "serverMsg":null, "enableWorkChk":false, "workerChk":false, "workerColor":{}, "workerMsg":"Engine stopped"}, "summary":{"message":" Total 766 tasks (713 Queued, 1 Failed, 51 Complete, 1 Skipped), 9 batches", "numComplete":1, "totalComplete":51, "numFailed":0, "totalFailed":1, "numOffline":0, "totalOffline":0, "recentlyQueued":0, "totalQueued":713}}}

You can also display it formatted as a summary message using http://localhost:8889/status/summary

{"status":"OK", "data":"Complete: 1 / 51\nFailed: 0 / 1\nOffline: 0 / 0\nQueued: 0 / 713\n Total 766 tasks (713 Queued, 1 Failed, 51 Complete, 1 Skipped), 9 batches"}
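As a rough sketch of how an external monitoring script might consume the status endpoint, the following reduces the response to a few headline values. The field names are taken from the sample response above, but their exact meaning is assumed, and the function names `summariseStatus` and `fetchStatus` are hypothetical.

```javascript
// Reduce a /status response to the values a monitor is likely to care about.
// Field names come from the sample response above; their meaning is assumed.
function summariseStatus(response) {
  if (!response || response.status !== "OK") {
    throw new Error("Worker returned an error or an unexpected response");
  }
  const status = response.data.status;
  const summary = response.data.summary;
  return {
    engineRunning: status.running,
    message: status.message,
    queued: summary.totalQueued,
    failed: summary.totalFailed,
    complete: summary.totalComplete,
  };
}

// Fetch and summarise the live status. Not called here; it assumes an
// environment with a built-in fetch (for example Node 18+ or a browser).
async function fetchStatus(baseUrl) {
  const reply = await fetch(baseUrl + "/status");
  return summariseStatus(await reply.json());
}

// Trimmed copy of the sample response shown above.
const sampleStatus = {
  status: "OK",
  data: {
    status: { running: false, message: "Engine stopped" },
    summary: { totalComplete: 51, totalFailed: 1, totalQueued: 713 },
  },
};

console.log(summariseStatus(sampleStatus));
```

With a running worker you would call `fetchStatus("http://localhost:8889")`, using whichever port was selected when the REST service was enabled.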

GET http://localhost:8889/tasks

Without any other parameters the tasks endpoint returns the default list of tasks. If you provide the address of a specific task it will return details for that task, eg. http://localhost:8889/tasks/606 :

{"status":"OK", "data":{"id":606, "process":1, "status":4, "priority":2, "instance":"Rolf-Touchbar.local-20190109-234657-0", "bookmark":"Helper1 20190110-0827.log:127", "filesize":194389, "name":"TestSequences.cdv", "mtime":{}, "lastSeen":{}, "root":"/Users/rolf/Media/UnitTestData", "filename":"/TestSequences.cdv", "numClips":1, "jobname":"Watch UnitTestData", "batchID":null, "online":null, "remoteClipId":117478, "remoteClipSeq":0, "queued":{}, "lastUpdated":{}, "details":"Import as Generic File, resulting in one clip, type Other (non-media file), duration 0:00:00.0\nPublished to 'Auto Publish 2019.01.10 08:27'\nRemote clip ID 117478\nTask took 3.8s"}}

You can filter the task list looking for all the tasks relating to a particular job, containing text in the filepath or details description, or belonging to a particular batch of tasks submitted in one go.

http://localhost:8889/tasks?filter=xxx

http://localhost:8889/tasks?jobname=xxx

http://localhost:8889/tasks?batch=nnn
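A small helper can build these task URLs and take care of encoding values such as job names that contain spaces. This is a sketch only: the parameter names (`filter`, `jobname`, `batch`) are the ones listed above, while the function name and its options object are hypothetical. Whether the worker accepts several filters in one request is not stated here, so combining them is an assumption.

```javascript
// Build a URL for the tasks endpoint.
// options.taskId returns the URL of a single task; otherwise any of
// options.filter, options.jobname and options.batch are added as query
// parameters (combining more than one is an untested assumption).
function tasksUrl(baseUrl, options) {
  const opts = options || {};
  const url = baseUrl.replace(/\/+$/, "") + "/tasks";
  if (opts.taskId !== undefined) {
    return url + "/" + encodeURIComponent(opts.taskId);
  }
  const params = [];
  ["filter", "jobname", "batch"].forEach(function (name) {
    if (opts[name] !== undefined) {
      params.push(name + "=" + encodeURIComponent(opts[name]));
    }
  });
  return params.length ? url + "?" + params.join("&") : url;
}

console.log(tasksUrl("http://localhost:8889", { jobname: "Watch UnitTestData" }));
```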

PUT http://localhost:8889/tasks

You can change properties of a queued task by submitting a PUT request with a JSON payload containing the following properties:

action: ‘resubmit’, ‘hold’, ‘resume’ or ‘update-priority’

taskIDs: <array of task IDs that the action applies to>

The update priority call requires an additional parameter:

priority: <numerical value 0=lowest – 4=highest>

DELETE http://localhost:8889/tasks

The delete action deletes the tasks whose IDs are specified in the taskIDs property of the JSON payload.
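The PUT and DELETE requests described above both carry a JSON payload, which might be assembled as follows. This is a sketch under assumptions: the function names and the returned `{ method, path, body }` shape are hypothetical, and treating the priority as a whole number is an assumption (the text only says it is a numerical value from 0 to 4).

```javascript
// Actions accepted by PUT /tasks, as listed above.
const TASK_ACTIONS = ["resubmit", "hold", "resume", "update-priority"];

function requireTaskIDs(taskIDs) {
  if (!Array.isArray(taskIDs) || taskIDs.length === 0) {
    throw new Error("taskIDs must be a non-empty array");
  }
}

// Describe a PUT /tasks request that changes queued tasks.
function buildTaskUpdate(action, taskIDs, priority) {
  if (TASK_ACTIONS.indexOf(action) === -1) {
    throw new Error("Unsupported action: " + action);
  }
  requireTaskIDs(taskIDs);
  const payload = { action: action, taskIDs: taskIDs };
  if (action === "update-priority") {
    // Assumption: priorities are whole numbers, 0 = lowest to 4 = highest.
    if (!Number.isInteger(priority) || priority < 0 || priority > 4) {
      throw new Error("priority must be a whole number from 0 to 4");
    }
    payload.priority = priority;
  }
  return { method: "PUT", path: "/tasks", body: JSON.stringify(payload) };
}

// Describe a DELETE /tasks request for the given task IDs.
function buildTaskDelete(taskIDs) {
  requireTaskIDs(taskIDs);
  return { method: "DELETE", path: "/tasks", body: JSON.stringify({ taskIDs: taskIDs }) };
}

console.log(buildTaskUpdate("update-priority", [606, 607], 4).body);
```

Validating the action and priority before sending avoids a round trip to the worker for requests that cannot succeed.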

POST http://localhost:8889/submit

Finally, a remote system can directly submit server query or watch folder tasks to the worker task list or queue a scheduled job immediately. In each case, you need to specify the job name, and either the server clip id or file path as appropriate.

The job you pass in can be disabled from normal running so it only runs if explicitly submitted via the REST API.

To allow tasks to be submitted remotely via the REST API you need to enable those job definitions that are intended for remote use by adding an allow.submit line to the Advanced Properties in the worker config, for example allow.submit=Job Name 1,Job Name 2

You should POST a JSON object detailing the files or clips to queue, for example

{"jobName":"Upload files", "files":[ "/Media/File1.mov", "/Media/File2.mov" ]}

{"jobName":"Process clips", "clipIDs":[ 12345, 12346 ]}

{"jobName":"Upload files", "file":"/Media/File3.mov"}

{"jobName":"Process clips", "clipID":12347, "userID":151}

{"jobName":"Scheduled job"}

In each case it will return a batchID that you can use to get the latest status of those tasks:

{"status":"OK", "data":{"batchID":1283}}

For convenience of testing you can also submit a task using GET but this isn’t recommended in normal use because the parameters are visible in the URL and you can’t pass a list of files or clipIDs.

You can associate a task with a specific userID if known.
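The submit payloads shown above could be generated with a helper along these lines, followed by a lookup of the resulting batch. The key names (`jobName`, `files`, `file`, `clipIDs`, `clipID`, `userID`) and the `batch` query parameter come from this section; the function names are hypothetical, and the job must already be listed in allow.submit for the worker to accept it.

```javascript
// Optional keys accepted in a submit payload, as shown in the examples above.
const SUBMIT_KEYS = ["files", "file", "clipIDs", "clipID", "userID"];

// Build the JSON body for POST /submit.
function buildSubmitPayload(jobName, target) {
  if (!jobName) throw new Error("jobName is required");
  const payload = { jobName: jobName };
  const source = target || {};
  SUBMIT_KEYS.forEach(function (key) {
    if (source[key] !== undefined) payload[key] = source[key];
  });
  return JSON.stringify(payload);
}

// Turn the submit response into the path used to check on that batch.
function batchStatusPath(response) {
  if (!response || response.status !== "OK") throw new Error("Submit failed");
  return "/tasks?batch=" + response.data.batchID;
}

console.log(buildSubmitPayload("Process clips", { clipID: 12347, userID: 151 }));
console.log(batchStatusPath({ status: "OK", data: { batchID: 1283 } }));
```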

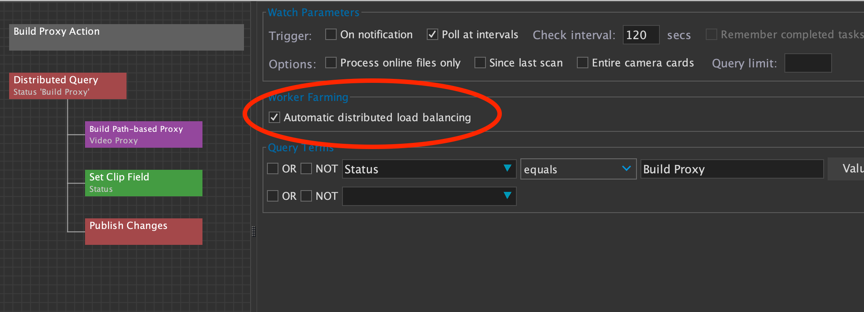

Worker farming

With a Pegasus Worker license you can have several worker instances in a cluster work cooperatively on the same list of jobs.

Rather than using the task list, which is local to each running instance, the database on the CatDV Server is used to coordinate the different instances.

On your server query panel check the “Automatic distributed load balancing” option:

If this option is selected then when the server query runs, rather than “grabbing” all the matching clips that need to be processed and adding them all to its task list in one go, the worker will only grab one at a time and will mark it as “being processed” on this instance. If you have several workers all running the same query against the server they co-operate to ensure that only one worker instance can grab each clip. If a clip is already being processed on one instance and this worker has spare capacity it will automatically move down the list and take the next clip that is eligible to be worked on. In this way multiple Pegasus Workers will automatically divide up the work between them with no risk of conflicts because two are trying to update the same clip at the same time.

This process is managed automatically by all the workers running the same query (and same job name) that have the worker farming option enabled. You can see which worker is currently working on any particular clip by looking at the “Worker Farming Status” (squarebox.worker.farming.status) clip field. In rare situations you might want to reset that field yourself. For example, if a job fails on one particular clip (perhaps the file is in a format that your transcode preset doesn’t support) then there is no point repeatedly processing the same file over and over again and failing each time, so once it has failed on one worker node the clip won’t be looked at again for 24 hours unless you clear that field.
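The claiming behaviour described above can be modelled with a short in-memory simulation. This is not the worker's actual implementation: the `farming` object, its `state` values, and the function name are invented for illustration, and in the real system the claim is coordinated through the CatDV Server database rather than a local array.

```javascript
// Failed clips are not looked at again for 24 hours.
const RETRY_AFTER_MS = 24 * 60 * 60 * 1000;

// clips: array of { id, farming }, where farming is null (unclaimed) or
// { worker, state: "processing" | "failed", at: <timestamp in ms> }.
// Returns the clip claimed for workerId, or null if none is eligible.
function claimNextClip(clips, workerId, now) {
  for (let i = 0; i < clips.length; i++) {
    const clip = clips[i];
    const f = clip.farming;
    if (f && f.state === "processing") continue; // another instance has it
    if (f && f.state === "failed" && now - f.at < RETRY_AFTER_MS) continue; // failed recently
    clip.farming = { worker: workerId, state: "processing", at: now };
    return clip; // grab one clip at a time, not the whole list
  }
  return null;
}

const clips = [
  { id: 1, farming: null },
  { id: 2, farming: { worker: "NodeB", state: "failed", at: 0 } },
  { id: 3, farming: null },
];
console.log(claimNextClip(clips, "NodeA", 1000).id); // first unclaimed clip
console.log(claimNextClip(clips, "NodeB", 1000).id); // moves down the list
```

Clearing the field (setting `farming` back to `null` in this model) makes a failed clip eligible again straight away, which corresponds to manually resetting the “Worker Farming Status” field.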

It isn’t necessary for all the worker node instances in a cluster to have exactly the same watch definitions as each other. For example, one instance might be there to handle ingest (indexing of watch folders) and another performs archiving, but they both have one common watch action that uses the worker farming option to do transcoding as a low priority background activity.

Containerised cloud deployment

If you use a containerised environment such as Docker you can store the worker configuration on a CatDV Server. This makes it easy to start up a new worker instance as you simply have to specify a few environment variables which allow the worker to connect to your server from which it will load its configuration. (Please note that the task list is still stored in local storage on that container instance, so if you terminate it and then re-create it, it will start again with a blank task list).

You use the Pegasus Worker Monitor dashboard to edit cloud configurations.

First, define the CatDV Server(s) you are using by entering hostname (or http url), user name and password in File > Cloud Servers, then do File > Cloud Configs to edit the worker configurations saved on that server. (It's done this way because you might have several servers and worker installations you're trying to manage). Use + and - to add or delete configurations, or the left arrow to import an existing worker.xml config file. You can save several configurations, eg. 'Ingest Node' and 'Transcode Node'. You'll get the regular worker config editor to edit these.

To tell a worker instance to use one of the cloud configs you need to set several environment variables when you deploy the instance:

| Variable | Description |
| --- | --- |
| SERVERHOST | host name or url of the server (must be specified) |
| SERVERUSER | user name to connect (defaults to Administrator) |
| SERVERPASSWORD | password to connect (defaults to blank) |
| CONFIGNAME | which cloud configuration to use if there are more than one (may be left blank if there's just one on the server) |
| WORKERID | unique name of this instance (must be specified) |
| REGUSER | worker license details (defaults to the license specified in the cloud config file) |
| REGCODE | worker license details (defaults to the license specified in the cloud config file) |
| SERVICEPASSWORD | the password used to enable remote management of the worker instance (defaults to the one specified in the cloud config file) |
| SERVICEPORT | the port on which the worker service will listen for remote management REST connections (defaults to 8889) |
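Before deploying a container it can be useful to check that the required variables are present and to see which defaults will apply. The sketch below encodes the rules listed above; the function name and the shape of the returned object are hypothetical, and `null` is used here simply to mean "fall back to the value in the cloud config".

```javascript
// Validate deployment variables and apply the documented defaults.
// env is a plain object of variable names to strings, e.g. process.env.
function resolveCloudWorkerSettings(env) {
  const missing = ["SERVERHOST", "WORKERID"].filter(function (name) {
    return !env[name];
  });
  if (missing.length) {
    throw new Error("Missing required variable(s): " + missing.join(", "));
  }
  return {
    serverHost: env.SERVERHOST,
    serverUser: env.SERVERUSER || "Administrator",
    serverPassword: env.SERVERPASSWORD || "",
    configName: env.CONFIGNAME || null, // null: use the only config on the server
    workerId: env.WORKERID,
    regUser: env.REGUSER || null, // null: license from the cloud config
    regCode: env.REGCODE || null,
    servicePassword: env.SERVICEPASSWORD || null, // null: password from the cloud config
    servicePort: env.SERVICEPORT ? parseInt(env.SERVICEPORT, 10) : 8889,
  };
}

console.log(resolveCloudWorkerSettings({ SERVERHOST: "catdv.example.com", WORKERID: "transcode-1" }));
```

The host name `catdv.example.com` and the instance name `transcode-1` are placeholders for the example.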

Cloud ingest actions

You can monitor an S3 bucket or other remote volume such as an FTP site and sync files there with a catalog in the same way as a quick folder scan works.

First, you need to define one or more “remote volumes”, including the type of file system and credentials (URL, user id, and password) to access this. Depending on the type of file system (curl, Amazon S3, Backblaze etc.) the URL might be referred to as a bucket or endpoint, the user id might be referred to as a key or access key, and the password as a secret key or application key.

Give each remote volume a unique identifier. This is shown using a special square bracket notation within media file paths and tells the worker how to access the remote file.

You are now ready to define a cloud ingest action:

This will result in clips in your catalog whose media file paths use this notation, eg. [SupportFTP]/pub/upload/file1.mov. These won’t be directly playable in the desktop but you can define media path mappings or media stores that map from these remote volumes to a local proxy volume. Additionally, with CatDV Server 8.1 you can define remote volumes and so it can directly download or play such files.
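To illustrate the square bracket notation, the following sketch splits a media path into its remote volume identifier and the path within that volume, and shows what a mapping to a local proxy volume might look like. Both function names and the proxy root `/Volumes/Proxies/SupportFTP` are hypothetical; the real mapping is done by the media path mappings or media stores you configure.

```javascript
// Split "[VolumeId]/path/in/volume" into its parts.
// Returns null for an ordinary path with no remote volume prefix.
function parseRemotePath(path) {
  const match = /^\[([^\]]+)\](.*)$/.exec(path);
  if (!match) return null;
  return { volume: match[1], path: match[2] };
}

// Map a remote path onto a local proxy root (hypothetical helper).
// proxyRoots maps volume identifiers to local folders.
function toLocalProxyPath(path, proxyRoots) {
  const parsed = parseRemotePath(path);
  if (!parsed) return path; // already a local path
  const root = proxyRoots[parsed.volume];
  return root === undefined ? null : root + parsed.path;
}

console.log(parseRemotePath("[SupportFTP]/pub/upload/file1.mov"));
console.log(toLocalProxyPath("[SupportFTP]/pub/upload/file1.mov", {
  SupportFTP: "/Volumes/Proxies/SupportFTP",
}));
```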

At this point the clips haven’t yet been analysed, so you would normally follow this by a server-triggered watch action to perform media analysis and build a proxy:

The ‘download remote files’ option tells the worker to download a copy of the file to a temporary cache on local storage so it can process the file if it encounters a remote file with square brackets. Don’t forget to set a failure action to prevent the same file being processed again if there is an error.

To avoid egress fees you may be able to run a cloud worker instance in the same cloud environment as your storage. Please check with your cloud provider for their specific pricing structure.

JavaScript filters

It is possible to customise file triggered watch definitions so that JavaScript is used at the time the trigger itself runs (ie. before a job is queued and runs) to determine which folders are scanned and whether a particular file should be processed. It is even possible to dynamically specify the list of files to be queued without scanning a physical directory at all.

To do this, add a JavaScript Filter from the special actions section and define one or more of the following functions:

expandRoots(root)

Return an array of root watch folders to scan. The watch folder that this watch action was originally configured with is passed in for reference but in most cases isn’t used.

acceptsFile(file)

The file path of a file that was found in one of the watch folders (and has passed other checks such as making sure the file is no longer growing and matches any file conditions such as an include or exclude regex) is passed in for validation. Return true or 1 to accept the file.

filterFiles(files)

If you need to filter the whole set of files that are found in one go (perhaps because you’re processing a complex camera card hierarchy or similar) you can filter the entire collection and remove or even add files as required. An array of file paths is passed in and returned.

generateFiles()

For complete control you can explicitly specify a set of file paths (as an array of strings) which the watch folder file trigger should return. This can even include remote file paths which don’t exist on this system.

For example, you could have a script such as

    function expandRoots(oldRoot)
    {
        // Query the server for matching catalogs and scan the watch path stored on each
        var paths = [];
        var reply = CatDV.callRESTAPI("catalogs", {"query":"((catalog[is.new])eq(true))"});
        reply.data.forEach(function(catalog) {
            paths.push(catalog.fields["watch.path"]);
        });
        return paths;
    }
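The other three functions might look like the following. These are minimal sketches: the extension list, the hidden-file rule, and the returned paths are made-up examples, and the code assumes file paths arrive as strings (or values that convert cleanly to strings) in an indexable list.

```javascript
// Accept only QuickTime and MXF files (example extension list).
function acceptsFile(file) {
  return /\.(mov|mxf)$/i.test(String(file));
}

// Remove hidden files (names starting with ".") from the whole set.
function filterFiles(files) {
  var kept = [];
  for (var i = 0; i < files.length; i++) {
    var path = String(files[i]);
    var name = path.substring(path.lastIndexOf("/") + 1);
    if (name.charAt(0) !== ".") {
      kept.push(path);
    }
  }
  return kept;
}

// Return an explicit list of paths without scanning a directory.
// Remote paths using the square bracket notation are allowed.
function generateFiles() {
  return ["[SupportFTP]/pub/upload/file1.mov", "/Media/File1.mov"];
}
```

A plain indexed loop is used in `filterFiles` rather than array methods so that the function makes as few assumptions as possible about the kind of list the worker passes in.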