CatDV Worker Node 9.1

(incl. Pegasus Worker)

User Manual

Copyright (C) Square Box Systems Ltd. 2006-2022. All rights reserved.

Square Box Systems Ltd

The Lightbox Willoughby Rd Bracknell RG12 8FB United Kingdom

Restructured Documentation

The Worker Node documentation has now been restructured into separate release notes (updated with each point release) and user manual (this document, generally updated with major version updates only). The old Pegasus Worker addendum has also been merged into this user manual.

Please refer to WorkerReleaseNotes.html for a detailed list of changes in each release.

New Features in Worker 9.1

Worker 9.1 builds on Worker 9 and adds the following:

· Significant improvements to the Avid AVB bin reader and AAF exporter so that virtually any file that can be imported or AMA-linked in Media Composer, including complex clips and image sequences, can be brought into CatDV and logged there, then re-exported to Avid where it will play and link to the original clip (and come over with markers, log notes, subclips, and cuts-only sequences intact).

· A new DNx OpAtom MXF exporter (requires Pegasus Worker) allows raw camera media to be ingested into CatDV first and then exported as a DNxHD proxy to the Avid MediaFiles folder, including burnt in text or timecode if desired. Special AvidProxy metadata fields on the original clip describe the exported files, so when the clip is exported from CatDV via AAF Media Composer will automatically link to and play the proxy (and you can subsequently Re-link to the original hi-res in Avid later, as required).

· A new Image Sequence exporter allows movies to be transcoded to a sequence of consecutively numbered images in most common formats including JPG, EXR, DPX, PNG, TIF etc. (again, including burnt-in text if required).

· Improved codec support, including support for playing and transcoding CinemaDNG image sequences, updated FFmpeg, RED, BRAW and Avid libraries, and better GPU support.

· A new option to control whether any worker processes are held in reserve for urgent high priority tasks or not.

More generally, Worker 9 extends Worker 8.1’s focus on cloud workflows and allows worker processes to run as separate processes from the main worker engine and task list, potentially even on different machines in future.

A key technical difference between Worker 8 and 9 is that Worker 9 is built on the CatDV 14 codebase, which uses OpenJDK 11 and is fully 64-bit only, which means that it can be notarised to run on Mac OS Catalina and later.

Other significant changes include:

· Support for the new clip types such as playlists and version sets introduced in CatDV 14. Lightweight image sequences represent an entire directory containing an image sequence as a single clip rather than a metaclip and are enabled by setting imageseq.lite=true. Similarly, lightweight directory clips represent a Mac package or bundle directory as a single clip rather than listing all the files within it. (By default ‘.app’, ‘.fcpbundle’ and ‘.framework’ are treated this way but you can override this list by setting package.exts.)

· Quick folder scan has the ability to detect when an existing file has been moved and to update the existing clip record, and move any existing proxies, rather than creating a new clip. Similarly, it can detect when a file has been deleted, and automatically move clips to the ‘Deleted Files’ catalog.

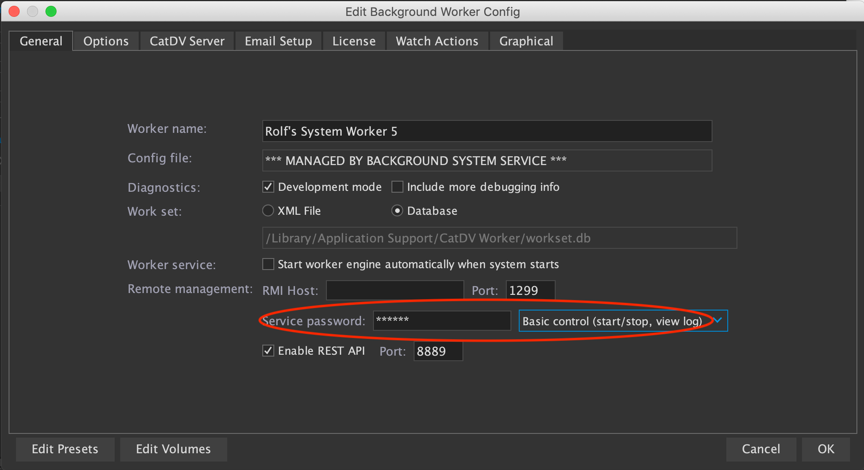

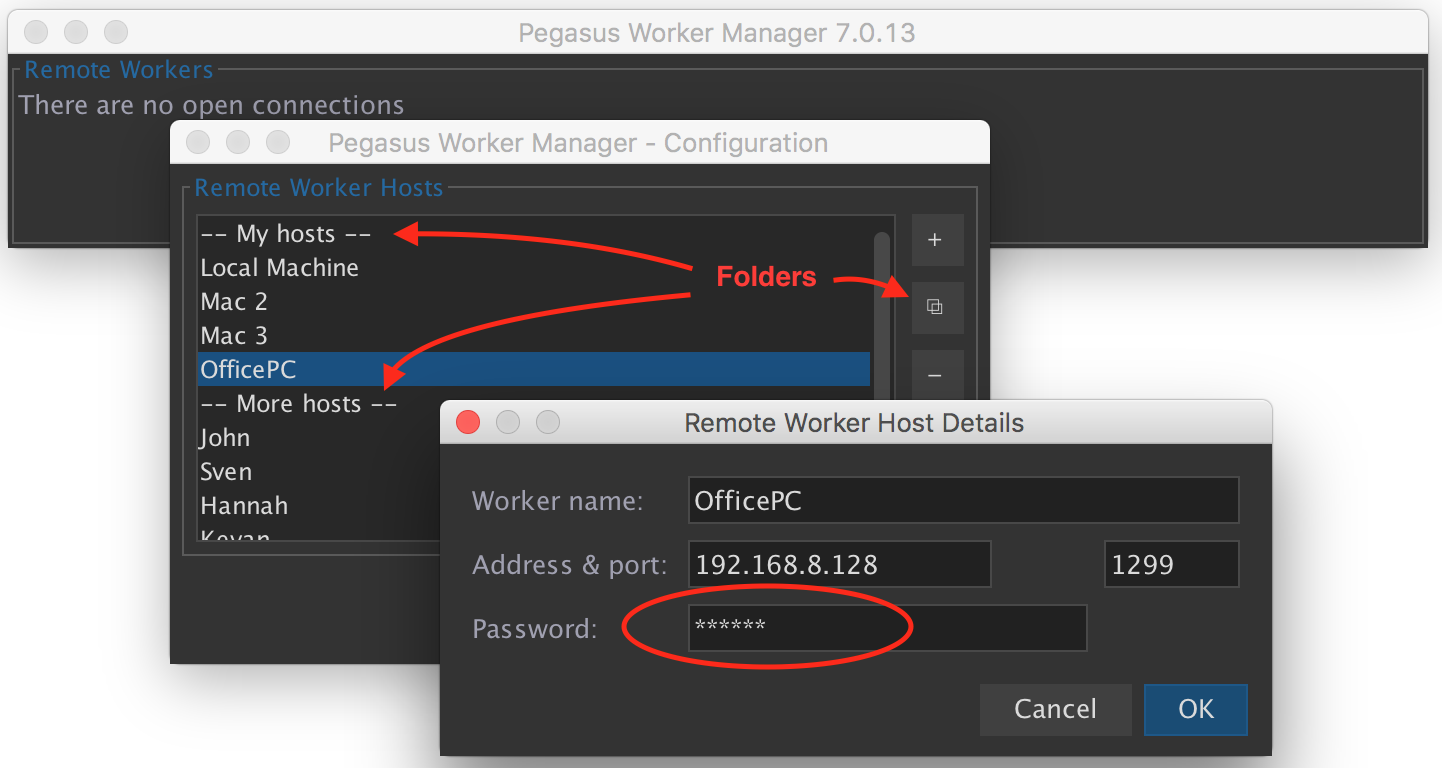

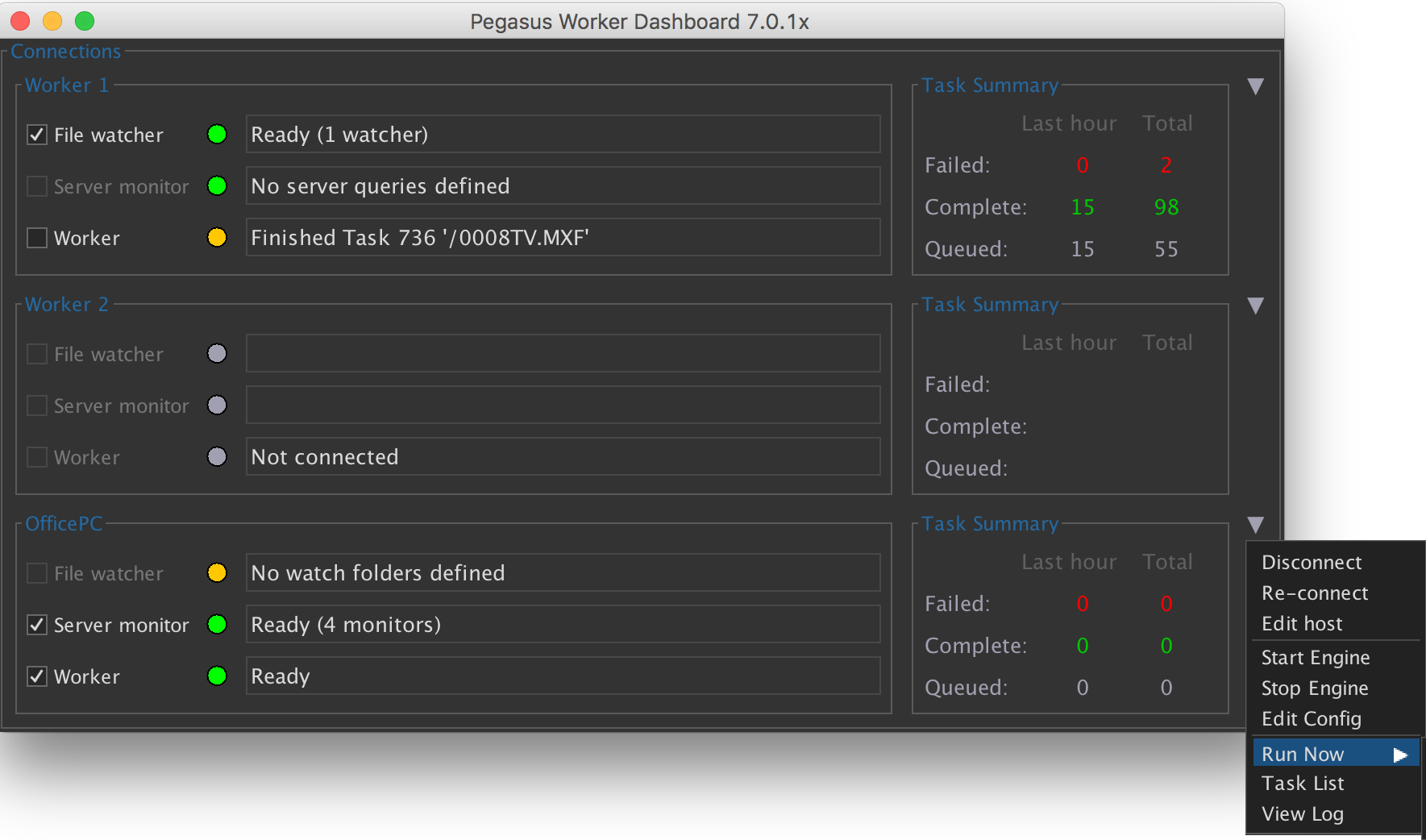

· Changes to make it easier to deploy the Worker in containers and VMs, including automatically registering with the server (for use in the Web Admin Worker Dashboard), allowing remote administration of a single worker without requiring a Pegasus Worker license, and improvements to the Pegasus Worker Manager so by default it gets its list of workers to administer when you connect to the server. Remote worker administration is now controlled by the role of the CatDV user making the change.

· When monitoring a Quantum StorNext volume the Linux Worker will use metadata archive change notifications so that a quick folder scan can more efficiently scan the volume and only process files and directories that have changed without needing to scan the entire directory tree. It still polls the metadata controller to ask it which files have changed but this is much more efficient and means the poll interval can be reduced (eg. to 10s).

· Usability improvements, including the ability to edit watch actions without pausing the threads while the worker is running, the ability to group related watch actions into sections and show dependencies between actions in the new graphical overview panel, and a new button to temporarily disable all watch actions other than the one you are working on and then restore the previous state when you have finished testing.

· Other changes such as adding Canon RAW and Avid DNx codecs, and loading metadata extraction naming rules from the server if they are defined there.
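The lightweight directory clip rule mentioned in the list above (a package or bundle directory becomes a single clip when its extension is in a configurable list) can be pictured with a short sketch. This is only an illustration, not the Worker's own code: the function name is hypothetical, and passing the extension list as a Python tuple is an assumption standing in for however package.exts is actually parsed. Only the three default extensions come from this manual.

```python
from pathlib import PurePosixPath

# Default extensions named in this manual. The tuple form is an assumption;
# the real list is overridden through the package.exts setting.
DEFAULT_PACKAGE_EXTS = (".app", ".fcpbundle", ".framework")


def is_package_dir(path, package_exts=DEFAULT_PACKAGE_EXTS):
    """Hypothetical helper: True if this directory would become one clip."""
    suffix = PurePosixPath(path).suffix.lower()
    return suffix in {ext.lower() for ext in package_exts}
```

For example, `is_package_dir("/Volumes/Media/Promo.fcpbundle")` is true with the defaults, while an ordinary folder such as `/Volumes/Media/rushes` is not.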

New features in Worker 8.1 and earlier

Worker 8.1 is a major update to the worker with a particular emphasis on cloud workflows with the following main changes:

· The ability to define “remote volumes” (with CURL, Amazon S3, and Backblaze B2 implementations) and then set up cloud ingest watch actions. These are similar to quick folder scan actions and monitor a remote volume or bucket to create clips that refer to a remote location by using a special media path that uses square brackets for the remote volume. You can then follow this with watch actions to analyse the media and build a proxy and it will automatically download the remote file to a temporary cache as required. (Pegasus Worker only)

· Remote volumes (file paths using square bracket notation) can also be specified as the destination for file copy or move operations and as the destination of a ‘build proxy’ step. (With CatDV Server 9.0 you can also specify remote volumes and use these in your media stores, including as the location of proxies and hi-res files for download).

· Support for containerisation. Using the Pegasus Worker Manager you can store worker “cloud” configurations in the CatDV Server database, and then to start up a worker instance you just specify environment variables that tell it how to connect to the server to fetch its configuration from the server (as instances don’t maintain persistent local state when they’re not running). The Linux worker is available as a prebuilt Docker container. (Pegasus Worker only)

· Use of web sockets and RMI over HTTP to simplify communication between the worker and server, and between the worker manager and the worker service, by only using standard HTTP ports rather than having to open up the network to allow RMI.

· The Linux worker now includes the native helper process to support decoding of RED, ProRes, ARRI, and other formats.

· Other changes include adding Blackmagic BRAW support, adding multi-line conditional steps, and Javascript failAndRetry and submit() methods.

These are in addition to features introduced earlier in Worker 8.0:

· The native helper process has moved to 64-bit, so QuickTime (which has been deprecated by Apple) is no longer supported and legacy dependencies on QuickTime have been removed. The NativeQT exporter is no longer available and transcode jobs should be migrated to use the Advanced Exporter instead.

· The Advanced Exporter has been significantly enhanced, including adding support for using native AVFoundation or Media Foundation exporters, ability to generate Apple ProRes files (including 4444XQ) on MacOS, and support for creating special audio waveform movie or film strip image files.

· Pegasus Worker integrates with the third party Tin Man Studio application from Calibrated to allow export of OpAtom DNxHD files for use in Avid Media Composer.

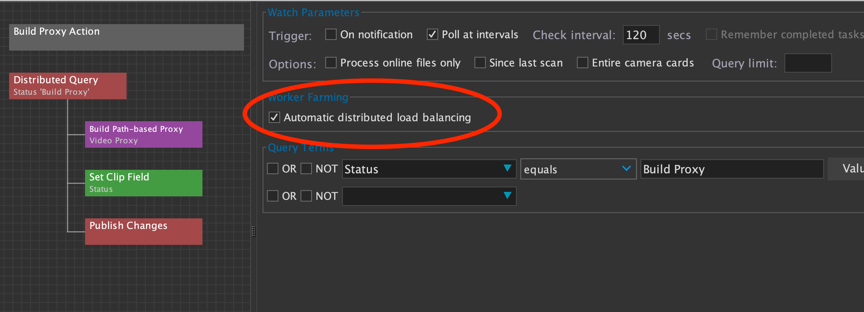

· For improved performance server-queries can be triggered when a notification is received from the server that a clip has changed rather than by regularly polling the server. If you check only the “Trigger on notification” checkbox then if any field that is used in the trigger changes on the server then the query is run immediately (though not more often than the check interval). If you check both options then the query runs regularly at the poll interval but if a notification arrives it brings the time forward and runs it immediately.

· Similarly, if you allow file uploads through the CatDV Web Interface, and have a worker watch folder to process incoming files, then the worker action can be triggered immediately by a notification from the server rather than having to poll the watch folder.

· Bulk queries that move clips to another catalog are much more efficient (by not opening up the destination catalog before appending to it, and by not unnecessarily refreshing the catalog from the server after publishing changes).

· Group processing steps like sending final email or performing a command on completion of other processing steps under a new “Completion actions” section.

· Add a new “failure action” processing step that is triggered if a task fails. You can run a JavaScript and/or set a clip field when an error occurs, for example to say that there was a problem creating a proxy for that clip. (Depending on what the error is the failure action isn’t always guaranteed to run of course. For example, if the connection to the server went down then you won’t be able to set a clip status and publish changes!)

· Add a new “Apply Metadata Rules” processing step

· Add a new $! variable that displays the last error message, and $# that displays the current value of the task summary.

· If you have a Pegasus Worker license there is a new REST API that lets you remotely monitor the worker and submit jobs.

· When in development mode there is a new option to resume running normally (for the duration of this session) without having to edit the config to turn development mode on and off.

· Support for loading path mappings from media stores stored on the server, avoiding the need to repeatedly define the same path mappings eg. from Mac to Windows paths or vice versa on each watch action. This applies both to path mappings to locate original media files and to locate Avid Media Files by UMID. You can still specify custom path mappings for each watch action and these will be checked before those from the server media stores. Note that when building proxies currently you still need to specify the destination root folder because there might be multiple potential matches and the worker needs to know where to put the proxies.
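The interaction between regular polling and the “Trigger on notification” option described earlier in this list can be summarised in a few lines. The following is a minimal sketch under stated assumptions, not the Worker's scheduler: the function and parameter names are hypothetical, times are plain numbers of seconds, and the sketch assumes the “not more often than the check interval” limit applies in both modes (the text states it only for the notification-only case).

```python
def next_query_time(last_run, now, check_interval, poll, on_notification, notified):
    """Return when the server query should next run, or None to keep waiting.

    poll            -- regular polling is enabled (assumed flag name)
    on_notification -- "Trigger on notification" is checked
    notified        -- a relevant change notification has arrived
    """
    earliest = last_run + check_interval
    if on_notification and notified:
        # A notification brings the run forward, but never earlier than
        # one check interval after the previous run (assumed for both modes).
        return max(now, earliest)
    if poll:
        # Plain polling: run once per interval.
        return earliest
    # Notification-only mode with nothing pending: wait for the server.
    return None
```

With a 30 second interval and a previous run at t=100, a notification at t=105 yields 130, while a notification at t=200 yields 200 (run immediately).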

Worker 8 builds on the following major changes that were added in Worker 7.0:

· Worker 7.0 is built on the CatDV 12 code base and includes improvements to the core engine in CatDV 12, including better metadata extraction (from MPEG, JPEG, WAV and MOV files), an advanced FFmpeg-based exporter, support for automatically creating a .jpg proxy when building proxies from a still image, ability to use syntax like clip.name or media['FNumber'] in variable expressions, support for Sony XAVC-S and Canon XF-AVC metaclips, improved AAF and FCPXML support, ability to create audio waveform thumbnails from audio files, and support for XMLv2 and SRT sidecar files.

· Like CatDV 12, Worker 7.0 features a more modern flatter look and feel, and native support for the CatDV Server 7 metadata schema.

· Worker 7.0 is able to run without QuickTime being installed. If QuickTime is available it will be used, as it provides access to Apple ProRes and third party codecs such as from Avid and Calibrated, but if it isn’t installed the Worker can still process most files using its built-in media file analysis and metadata extraction routines and transcoding capabilities provided by FFmpeg.

· As a result of reducing the worker’s dependency on QuickTime we are now able to offer a version of the worker that can run under various versions of Linux (to enable deployment on the cloud for example).

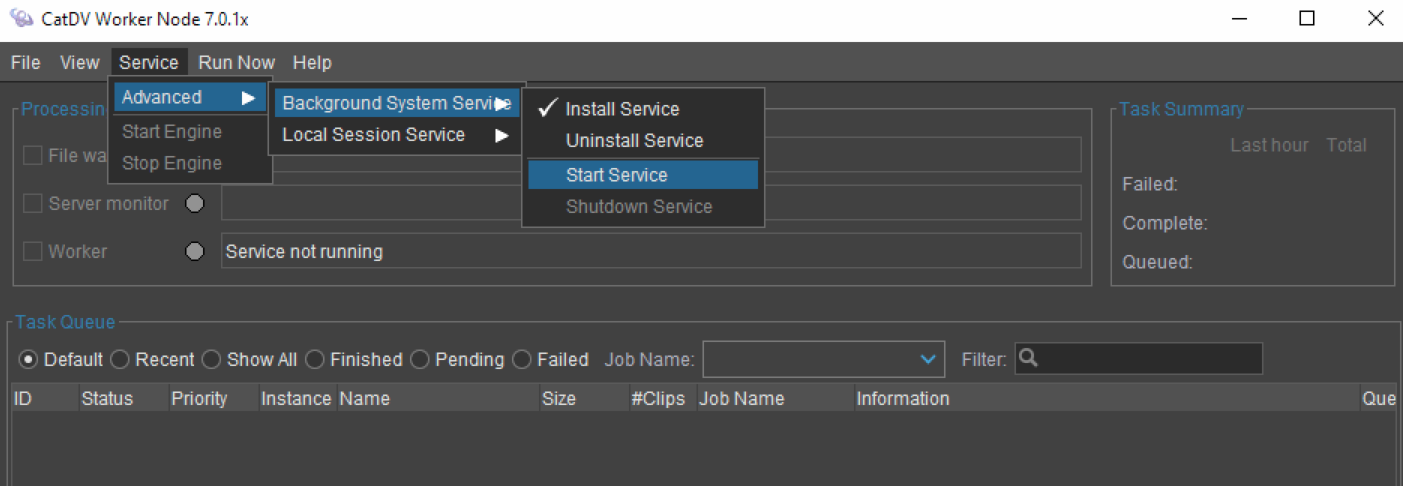

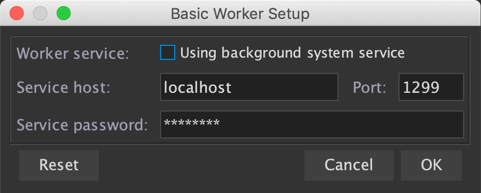

· The worker engine now runs as a separate process from the user interface. There are new buttons to explicitly start and stop the worker running. Editing the configuration no longer has to immediately apply the changes and restart the worker and it is possible to quit the worker user interface (i.e. the config editor and status/task list monitoring application) while leaving the core worker engine itself running as a background process.

· There is the option to store the task list using an embedded database rather than using the old xml file based format as this is more efficient and permits faster throughput.

· There is a new graphical configuration editor that displays a visual overview of all the watch definitions and processing steps. Different colours indicate the type of processing step, and “stacked” nodes indicate where a single watch action such as a server query can queue multiple separate tasks. Double click a node to edit the watch definition, then drag and drop available processing steps across to edit the watch action.

· The worker main window includes a new task summary panel that shows how many tasks completed or failed, without needing to keep the task list panel open to monitor failed tasks. Additionally, it is possible to have multiple task list panels open with different task filters, and to undock or remove the task list from the main window.

· Enterprise and Pegasus Worker support a new Execute Javascript processing step, and also support plugin extensions (for example a File Path Cleaner or Upload To YouTube processing step) that can be purchased from our market place.

· It is possible to disable watch actions so they don’t run automatically, but instead run them manually from the Run Now menu, or with the new Run Watch Action processing step. This allows one watch action to trigger another watch action immediately without requiring polling.

· Better control over task priorities (lowest priority watch actions will only run and queue new tasks when the system is idle, and if you have three or more worker processes then at least one process is reserved for normal and high priority tasks only).

· New importer for Avid AVB bin files, including an AVB Folder Sync importer that watches a folder for new or modified bin files and only imports new clips.

· New Segment Exporter (based on FFmpeg) that automatically splits a transcode up into separate segments and then concatenates them. This allows things like spanned metaclips and complex sequences including metaclips to be transcoded, and means event marker text can be burnt in. It also allows exported movies to be preceded with an optional title slate, and provides more consistent handling of audio when transcoding files with different numbers of audio tracks and channels of audio per track, including a new option to mix down all the input audio tracks to a single output track.

· Ability to use cuvid and nvenc hardware acceleration if you have a Windows PC and a modern NVIDIA graphics card and are transcoding to H.264 or HEVC/H.265.

· Enterprise and Pegasus Worker can be installed as a background service that is started automatically when the system boots up, rather than having to be started manually in a logged in user session.

· If you have a Pegasus Worker license there is a new option on the server query panel to queue one task at a time to support worker farming. When the query runs, rather than queuing all the matching clips it will just queue the first one, and atomically update the status of the clip to say it has been queued. This allows workers running on different machines to fetch one task at a time from the server. Additionally, as soon as one task completes the query is automatically and immediately performed again (avoiding the need to keep running queries with an unnecessarily high poll rate).
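The “queue one task at a time” behaviour relies on claiming a clip atomically, so that two workers never pick up the same one. The sketch below illustrates that general technique with an in-process SQLite database. It is not how CatDV Server stores clips: the `clips` table, the status values and the function name are all hypothetical and exist only for the example.

```python
import sqlite3


def claim_next_clip(conn, ready="Ready", queued="Queued"):
    """Claim at most one matching clip and return its id, or None.

    Assumes a hypothetical table: clips(id INTEGER PRIMARY KEY, status TEXT).
    """
    with conn:  # commit on success, roll back on error
        row = conn.execute(
            "SELECT id FROM clips WHERE status = ? ORDER BY id LIMIT 1",
            (ready,),
        ).fetchone()
        if row is None:
            return None  # nothing left to queue
        # Conditional update: only succeeds if the clip is still unclaimed,
        # so a competing worker that got there first makes rowcount 0.
        cur = conn.execute(
            "UPDATE clips SET status = ? WHERE id = ? AND status = ?",
            (queued, row[0], ready),
        )
        return row[0] if cur.rowcount == 1 else None
```

Each worker would call such a function again as soon as its current task completes, which mirrors the way the query is re-run immediately rather than waiting for the next poll.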

Worker 6.1 is a major update to Worker 6.0 that:

· Increases the use of processing steps, by replacing the final copy/move/delete file step and the setting of clip fields on the Publish tab with processing steps, adding more flexibility and allowing the user interface to be simplified.

· Adds support for conditional processing by making each processing step conditional. Only those clips which meet a condition (those where a specified field matches a regular expression pattern) will be processed by that step, and if no clips match then that step will be skipped entirely. Taken together with server query triggered tasks and the Test File Existence processing step this allows a great deal of flexibility when developing worker scripts.

· Adds a new option to resume the worker after a few minutes if a server error occurs (normally unexpected server errors cause the worker to pause, allowing you time to resolve the problem manually, but you may want to select this option for unattended processing).

· Adds support for limiting the number of files that will be processed at a time in one quick folder scan or bulk server query step.

· Adds support for camera card manifests to ensure that a complete camera card is copied or archived when dealing with complex clips (P2, XDCAM, XF, XAVC, AVCHD, etc.). Additionally, the media path for metaclips is shortened and made more consistent so it doesn’t include unnecessary path components like BPAV/CLPR or CONTENTS.

· Allows array syntax to pull out parts of a file name in variable expressions.

· Supports rotation of worker log files (by default a new log file is created once per day or when the log file gets over 40MB in size, or when you reinitialise the worker, and the five most recent files are kept).

· Add support for importing and transcoding DPX and EXR image sequences.

· Add support for transcoding from any supported media format to any other via intermediate image sequences (for example, from RED or AVCHD directly to QuickTime ProRes).

· Improvements to file preprocessing, with explicit control over whether to copy then delete files to move them across file systems, and options on how to deal with error conditions. (Use the copy/move directory command to cope with complex media types and the regular copy/move file command for normal files.)
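The conditional processing rule introduced in Worker 6.1 (a step handles only clips whose field matches a regular expression, and is skipped entirely if none match) can be sketched as follows. This is an illustration only: clips are modelled as plain dictionaries, the function names are hypothetical, and the use of a partial match (`re.search`) rather than a whole-value match is an assumption.

```python
import re


def clips_for_step(clips, field, pattern):
    """Return the clips whose `field` value matches the pattern."""
    regex = re.compile(pattern)
    # Missing fields are treated as empty strings (an assumption).
    return [c for c in clips if regex.search(str(c.get(field, "")))]


def run_conditional_step(clips, field, pattern, step):
    """Apply `step` to matching clips; return False if the step was skipped."""
    matching = clips_for_step(clips, field, pattern)
    if not matching:
        return False  # no clip met the condition, so skip the step entirely
    for clip in matching:
        step(clip)
    return True
```

For instance, with a condition of `^Approved$` on a Status field, only approved clips reach the step, and a batch with no approved clips leaves the step untouched.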

Worker 6.0 adds the following main features:

· The main change architecturally is that Worker 6 has been updated to work the same way as CatDV 11. The application now runs 64-bit for improved performance and memory handling and uses the new native helper process for import and transcoding of files (instead of the older QuickTime for Java technology). It also comes bundled with Java. These changes ensure compatibility with newer system software such as Mac OS X Yosemite.

· Worker 6 provides access to the new Native QT and FFmpeg transcoders of CatDV 11 (replacing the old 32-bit Xuggle, DSJ, and QTJava technologies which are now obsolete).

· There is a new CatDV XML v2 file format for importing and exporting clips which provides full access to the CatDV data model, including sequences, metaclips, event markers, metadata fields, thumbnails, and more. It is possible to update selected fields of existing clips via XML, as well as inserting new clips (both online and offline), and to automatically delete existing clips if required. The old CatDV XML batch file format continues to be supported.

· The ‘Conversions’ tab has a new panel where any number of processing steps can be added, replacing the fixed command execution and XML export fields. This provides a lot of additional flexibility and allows related files to be copied or moved, multiple commands to be executed, etc. Movie Conversions (proxy generation, and exporting stills and standalone movies) use this mechanism too, allowing conversions and other types of processing steps to be intermingled as required. Existing Worker 5 scripts are automatically converted to use the new processing steps (and a backup copy of the old worker script is kept).

· Similarly, there is a new batch export panel to export all the clips returned by a server query in different formats including AAF, ALE, EDL, CDV catalogs, and FCP7, FCPX or CatDV XML formats, and also to execute an external command on the exported clips.

· Improvements to Quick Folder Scan and the ability to test whether original media or proxy files exist make it easier to keep catalogs and disk folders in sync and perform conditional actions if certain files are present or missing.

· There is a new ‘scheduled job’ trigger type for performing actions such as a database backup at specified times. The user interface for defining watch actions has been updated to expand the description of different trigger types.

· Metadata extraction rules can be applied to automatically populate metadata fields based on file naming conventions when importing a media file.

· The task list viewer in the main window has various options to filter the tasks based on their status or by searching for text in the file path or task summary.

· It is possible to hide watch actions which are no longer being used but which you don’t want to delete yet.

· ‘Allow polling’ has been changed to ‘remember completed tasks’ and the sense of this flag has been inverted. Wherever possible you should write watch actions so they remove the trigger condition once the task has been completed (change the status of a clip so it isn’t returned by the server query again, move a file out of the watch folder once it has been processed, etc.) and you don’t rely on the worker remembering completed tasks as this is much more efficient.

· It is possible to import and export individual watch actions and conversion presets, making it easier for systems integrators to provide and install customised scripts for particular tasks. These are saved as an XML file with extension .catdv which can be dragged into the worker node window to install it.

· There is a new job description field for adding optional notes about what a watch action does, and an optional worker name field to help identify which worker is which if you have multiple instances.

· There is a new field chooser when building server queries and setting metadata fields which allows you to type in the id of an arbitrary “blue” metadata field that might not appear in the list, eg. @ExifVersion.

· There is a new wizard to help you configure the destination path for a file export or move operation, including a regular expression previewer that will evaluate and display the value of an expression as you make changes.

· There is a new wizard to help with configuring command lines, including the ability to pick files for each individual argument and built-in templates for common actions such as Episode transcoding or MySQL backups.

· There is a new ‘Dump Variables’ processing step to help with debugging worker scripts.

CatDV Worker 5.1 builds on Worker 5.0 and provides additional flexibility in terms of the types of work action that are supported, in particular for dealing with large numbers of clips in one operation:

· If you don’t need to perform slow operations like transcoding or thumbnail extraction on each file it is now possible to do batch operations such as a bulk server query and update or importing an entire folder of files in one operation

· A new ‘re-analyse media’ action allows basic stub clips to be created in the database first and then the detailed media file analysis and thumbnail extraction to be performed later (either once the file is available, or based on a server query)

· Ability to batch import clips from a CatDV XML Batch File even if the media files are not available, providing an easy way for external systems to insert clips into the database

· Other new features such as RED metaclips, automatic calculation of MD5 checksums, support for preserving P2 and XDCAM folder structures when copying and moving files, and improved handling of Unicode characters have also been added since Worker 5.0. See the Release History below for a detailed list of changes.

The following features were added in CatDV Worker 5.0:

· New framework for working with additional media libraries (such as JMF or Xuggle, rather than just QuickTime) for both importing and transcoding media files

· Improved QuickTime exporter and rewritten settings dialog, including ability to set QuickTime annotations, burn in text using complex variable expressions, override the reel name when adding a QT timecode track, better control over specifying the frame size, and set the background colour when burning in text or timecode.

· New Xuggle exporter, giving access to additional import and export formats, including the ability to deinterlace the video by line doubling and burn in text or timecode

· New DSJ exporter (Windows only) giving access to Windows Media and DirectShow codecs. (Note: on Windows 2008 Server it may be necessary to enable the “Desktop Experience” feature via Manage Computer to provide access to the DirectShow/Windows Media libraries.)

· Update the processing engine from CatDV 8 to CatDV 9/10 code base, so that changes made since CatDV 8.1.12 are now included (and ensure they continue to stay up to date in future). This includes changes such as being able to select the Xuggle or JMF importers (if installed), support for reading IPTC metadata, and improved metaclip support.

· Ability to run the task processor in a separate process, protecting against the possibility of crashes on particular media files. (Note that running in a separate process is not supported under Windows XP and requires Vista or later.)

· Support for exporting all the clips that match a server query to HTML or XML as a single batch, rather than separately for each clip.

· Simplify the worker by removing the ability to define more than one work set.

· Instead, allow for multiple worker processes to run concurrently for increased throughput (note that each worker will require its own license). Tasks can be assigned a timeout value and will be terminated after that time or if the external processor stops responding.

· Allow scheduling of tasks so they only run on certain days of the week or certain times of the day. This affects both when new jobs are created (via a watch folder or by running a server query) and when previously queued jobs are permitted to run.

· Incorporate the task list in the main window

· Remove the “pending” task state. Clips are published to the server immediately rather than being batched up. Additionally, appending to an existing catalog is more efficient as the worker no longer opens the catalog by loading existing clips from the server simply in order to append to the catalog.

· More logical arrangement of the user interface for defining a watch action, with changes to the post-processing and trigger tabs

· Increased number of extra fields that can be set on a clip

· The Windows version now comes with an .exe installer

· Ability to restrict watch folder actions to known file types such as common media files or P2 folder structures, based on the file name and extension

· New development mode to speed up testing by reducing poll and settle periods to 10s and prompting for confirmation before starting each task

· New “Slate” look and feel (this can be turned off if necessary on the General config page)

· The worker.xml file and default workset location (though you can change the latter if required) have moved from your home directory to the application data directory. After migrating to Worker 5 you can remove the old worker files to avoid cluttering your home directory.

These are in addition to the following features which were added in Worker 4.0:

· Support for unlimited server query terms

· Improved management of watch definitions, including a new ‘test’ button for watch folders and server queries and the ability to ‘solo’ individual actions by shift-clicking the Enabled button

· Support for media path mapping

· Improved handling of jobs submitted via XML batch files

· New options to use time of day timecode, add watermarks, and perform two-pass transcoding to add a second audio track with different settings

· Support for loading user-defined field names from the server

· Ability to specify separate emails for success and failure notifications

· Ability to perform processing on completion of an xml or server triggered batch

· Support for regular expressions to modify variable values

· A major new command line interface to generate reports or perform updates on the database for use within external scripts.

Detailed Version History

For detailed release notes please see the separate WorkerReadMe.html document.

Overview

The CatDV Worker Node is an automated version of the media processing engine found in the CatDV Pro application. It has two main components, the file watcher that watches one or more watch folders looking for new files to process, and the worker, a background thread which waits for and then processes the next available item from a queue of tasks.

The worker node must be configured to define which watch folders it monitors, what operation(s) to perform on these files, and how to access the CatDV server.

To configure the worker you specify:

· common definitions, such as the host name of the CatDV Workgroup or Enterprise Server, or details of an SMTP server for sending email notifications

· the location of the task queue file that the file watchers will add items to and the worker thread will remove items from as it processes them

· a definition of how items are added to the queue, ie. which folder to watch and any filter conditions that the file must fulfil to be processed (such as its filename matching a particular pattern, or it containing media in a particular format)

· a definition of what operations to apply to matching files.

Supported operations that can be performed include the following:

· analyse the file to extract technical metadata such as the video and audio format, sample rate, timecode etc.

· perform automatic scene detection to create separate clips for each scene

· create a CatDV preview movie from the media, or convert it to another format using an arbitrary QuickTime exporter and codec

· publish the clips (ie. details of the file and any scenes that were detected) to the CatDV server

· generate or update an HTML index page listing the processed clips

· send an email notification to selected users notifying them of the new file(s)

· execute an arbitrary operating system command, such as uploading the files to an FTP server, running a shell script or batch file, or anything else.

· move or delete the original media file once it has been processed or converted

The combination of a watch folder, filter condition and job definition is known as a “watch action”. You can define as many watch actions as you want, all with different filter conditions, to automatically process different kinds of file in different ways.

The combination of a task queue file plus a set of watch actions that will cause items to be added to the queue is known as a “work set”. The work set represents a queue of tasks to be performed (or that have already been performed recently).
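The relationship between the file watcher and the worker is a classic producer and consumer arrangement around a queue. The sketch below shows that pattern in miniature. It is not the Worker's implementation: it uses an in-memory queue where the manual describes a task queue file, and the function names and the `None` end-of-work marker are assumptions made for the example.

```python
import queue
import threading


def worker_loop(tasks, process, results):
    """Consumer: wait for the next item and process it, until told to stop."""
    while True:
        item = tasks.get()
        if item is None:  # sentinel used by this sketch to end the loop
            break
        results.append(process(item))


def run_work_set(paths, process):
    """Producer side: queue each file found, then wait for the worker."""
    tasks = queue.Queue()
    results = []
    worker = threading.Thread(target=worker_loop, args=(tasks, process, results))
    worker.start()
    for path in paths:  # stands in for files discovered in a watch folder
        tasks.put(path)
    tasks.put(None)
    worker.join()
    return results
```

Because a single worker thread takes items in the order they were queued, the results come back in the same order as the input paths.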

Quick Start Guide

This section provides a quick tutorial, covering the basic steps you need to go through to start using the worker node:

1. Launch the CatDV Worker Node application. It should automatically bring up the Edit Configuration window, otherwise press the Edit Config button.

2. Go to the “License” tab and enter your registration code. (To do this, select and copy the entire contents of the email message you were sent then simply press the Paste button.)

3. If you use the CatDV Workgroup or Enterprise server, enter the server hostname and other details under the “CatDV Server” tab. (If you use the Enterprise edition of the server you should create a special user for the worker node to use and enter the username here.)

4. If you want to send email notifications you need to enter details of the SMTP mail server you wish to use under the “Email Setup” tab.

5. Go to the “Watch Actions” tab and click the “+” button to define a new watch action.

6. First, define what files the action applies to. It can be triggered by a file appearing inside a particular watch folder or by dropping a file into the Worker Node application window, and it may be restricted so it only applies to files that meet certain filter conditions. For example, you could enter “*.mov” as a filename pattern, or “44.1” in the audio format field. (Alternatively, you can define a server query, in which case clips that have been saved to the server are processed instead, for example those whose status is “Publish via FTP”.)

If you don’t want to check a particular condition just leave the fields you’re not interested in blank to indicate “don’t care”.

7. Then, define what actions you want to apply to the matching files. Enter a job name to describe the action (eg. “Convert audio to 48 kHz”) and complete other fields under Job Details and Post-Processing as required. Many of the operations correspond to commands in the CatDV application. Analysing media corresponds to importing a media file and generating clips, movie conversions correspond to the Export As Movie and Build Preview Movies commands, and so on.

8. Press the “+” button on the Job Details tab to define as many movie conversions as you want. A single input file might be processed to create multiple versions at different resolutions for example. For the format, choose New Conversion Preset, give it a descriptive name then select which exporter to use and press Customise to adjust its settings. You can also choose a preset you have previously defined.

9. Repeat steps 6 through 8 to define as many different watch actions as you need, or use the “Duplicate” button to use an existing watch action as a template and then edit the copy.

10. When you are finished, press Apply to re-initialise the worker node with the new settings and start processing files.

If you specified one or more watch folders they will be scanned initially after a short delay, and then repeatedly after the period that you configured. Alternatively, if you enabled file dropping, then you can drag and drop files from the Mac OS X Finder or Windows File Explorer into the main Task Queue area to submit them for processing.

User Interface

When you launch the worker node application it displays its main window, which summarises the current status of the worker and has commands to let you edit the configuration, display the detailed status of a particular task, and view the log file.

On the Mac the worker node continues to run in the background even when the main window is closed. Under Windows it terminates when you close the window.

Three distinct processes run concurrently and independently in the worker node: the File Watcher (which periodically scans the watch folder(s) looking for new files), the Server Watcher (which performs queries against the server looking for clips that are flagged for processing), and the Worker itself (which fetches tasks from the task list and performs them). The main window has check boxes which you can use to enable or disable these processes, and it also displays what they are currently doing.

Task Queue

At the heart of the worker node operation is the task list, a combined queue of tasks waiting to be performed and list of tasks that have already completed. Each task represents a clip or file that needs to be processed and a job to be applied to it.

The main window shows your current tasks in a table, with one task per row, whether completed or queued waiting to run. Each task has details of the input file and the job to be applied to it, together with information such as when the status was last updated, when the file was last seen (useful if, for example, the file is on a removable or network volume and is currently offline), and whether the task entry was created by the current running instance of the worker node or not (it may not have been, either because the software has been restarted or because another instance on another machine is using the same work file).

A task can be in one of several different states:

Queued When a new file appears in one of the watch folders a new task is created and queued. When the worker runs it will start executing the first available task whose status is ‘queued’.

Running This means the task is currently being executed. Note that several worker nodes on different machines could be working on the same task list, so it’s not necessarily being executed by the instance on this machine. (In practice though, if the instance is not the current one the most likely explanation is that an earlier instance was terminated prematurely and the worker node software has been restarted.)

Failed The task has completed but an error occurred. See the Information field for further details. If you correct the problem (eg. by starting the workgroup server, if it was previously down) you can resubmit the task so it is performed again.

Complete The task has successfully completed.

Hold If you want to temporarily defer tasks (for example, to allow tasks that were queued later to be executed first) you can mark them as being on hold.

Offline This status is similar to tasks being on Hold. Tasks that were previously queued are automatically set to Offline when the worker node starts up if the file they relate to is no longer available (for example, because a server volume is temporarily offline).

Skipped Some jobs (such as importing an image sequence or a P2 metaclip) process multiple files in one go. If a file was queued for processing but has already been processed by another job it is marked as Skipped.

Retry The task has failed due to a transient error, such as a network resource being unavailable, and stays in the queue to be retried after a progressively increasing delay. This state is entered via JavaScript.

You can resubmit failed tasks (for example, after restarting the CatDV server), hold or resume tasks, or delete tasks using the relevant buttons at the bottom of the task list.

Tasks have a priority which affects the order in which they are done. The job definition defines a default priority but you can adjust the priority of specific tasks in the queue if you want to bring one forward.

Tasks are normally hidden after 3 days (unless you check the Show All box) and deleted altogether after 14 days.
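
The task states, priorities and retention periods described above can be modelled roughly as follows. This is an illustrative Python sketch only, not the Worker's actual implementation: the Task class, the next_task and is_visible functions, and the assumption that a larger priority number means a more urgent task are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import List, Optional


class Status(Enum):
    QUEUED = "Queued"
    RUNNING = "Running"
    FAILED = "Failed"
    COMPLETE = "Complete"
    HOLD = "Hold"
    OFFLINE = "Offline"
    SKIPPED = "Skipped"
    RETRY = "Retry"


@dataclass
class Task:
    file_path: str
    job_name: str
    priority: int          # assumption: a larger number means more urgent
    status: Status
    last_updated: datetime


HIDE_AFTER = timedelta(days=3)     # tasks are normally hidden after 3 days
DELETE_AFTER = timedelta(days=14)  # and deleted altogether after 14 days


def next_task(tasks: List[Task]) -> Optional[Task]:
    """Pick the queued task with the highest priority; earlier tasks win ties."""
    queued = [t for t in tasks if t.status is Status.QUEUED]
    # max() returns the first maximal element, so list order breaks ties.
    return max(queued, key=lambda t: t.priority, default=None)


def is_visible(task: Task, now: datetime, show_all: bool = False) -> bool:
    """Mimic the Show All check box: old tasks are hidden unless it is ticked."""
    age = now - task.last_updated
    if age > DELETE_AFTER:
        return False  # would already have been removed from the task list
    return show_all or age <= HIDE_AFTER
```

Tasks on Hold, Offline or in any other state are never returned by next_task in this sketch, which mirrors the rule that the worker only starts tasks whose status is ‘queued’.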

Configuring the Worker Node

When you first launch the worker node software a message will be displayed because you don’t have a valid configuration. Press the Edit Config button to edit the settings. You will be taken through various screens and tabs for the different parts of the configuration:

General settings

Under the General tab you can choose your preferred format for displaying dates and times. You also specify how catalogs which are auto published to the server are named.

You need to enter a valid worker node license by entering the user name and activation code under the License tab. The easiest way to do this is to select the email message you were sent (contact [email protected] if you don't have a copy), copy the entire text, then press the Paste License button.

Under the CatDV Server tab are settings that relate to the CatDV Workgroup or Enterprise Server. To automatically publish clips to the server you need to enter the server name (and optional port number) in the Server URL field.

If you are using the CatDV Enterprise Server enter the user name, password and group name (available from the CatDV Server Admin panel) that you want to own any catalogs that are automatically published by the worker node.

If you are using the Enterprise server you can enter the name of the field definition set you are using and press the Connect (or Re-Connect) button to load its field names from the server. This ensures that the correct names for user-defined fields are shown when defining server queries or setting clip fields.

Publish Interval defines how long to wait before a clip is published to the server. Using this setting allows several files to be batched up and published in one go, which is more efficient but introduces some latency before other machines on the network will see the files.

To send email notifications whenever a file has been processed you need to have access to an SMTP server that will accept messages sent from the worker node. Under the Email Setup tab enter the server hostname, the From address that should appear in the message and a default subject (you can specify different subjects in different watch actions). You can also enter a user name and password if the server requires authentication.

In the same way as publishing clips to the server can be batched, email notifications are also batched so no more than one message is sent in a given period. You specify the recipients for normal notifications as part of each specific watch action definition, but you can also enter the email address of the administrator user(s) to be notified if an error occurs (for example, an error connecting to the CatDV server).

The configuration needs at least one work set definition to be valid. You define work sets and watch actions under the Watch Actions tab.

Creating work sets and watch actions

In the current version of the worker you can only have a single work set. The work set includes the name of a task list file. This file is important as it contains the queue of tasks, both completed and currently running, and other information. In most cases you can use the default task list file but if necessary you can specify a different task location by clicking on the name to display a file chooser. The file name you choose should have extension .xml.

If you will run the worker node under your user account a good place for the task list file is in your own home directory, as you will need read and write access to both the file and the directory that contains it.

You can define any number of watch actions in your work set, each of which defines a watch folder and tasks to be performed on any matching file that appears in the watch folder. Press the ‘+’ button to add a new watch action or use ‘Duplicate’ to copy an existing watch action (you can then edit the copy without having to enter common settings again).

Editing watch actions

A watch action has two distinct parts: a definition of which clips or files should be processed (based on conditions such as where they are or what type they are) and a definition of what operation(s) should be applied to those files.

An action can be triggered based either on files appearing in a watch folder (or being dragged onto the worker icon), or based on clips saved to the CatDV server that match particular query conditions. If an action is triggered by the appearance of a file it must first be imported using one of CatDV’s importers (for example, analysing the file using QuickTime to extract metadata such as the file format, duration, timecode, etc.) whereas for a server-triggered action the clips have already been imported (either from a CatDV client or perhaps by another worker task) and so the file pre-processing and import stage is omitted.

The ‘General’ tab defines the name of the job, its relative priority, whether to send an email notification on completion of the job, and whether the job is triggered by a file or by a server query.

For file-triggered tasks, the ‘Trigger’ and ‘Conditions’ tabs define the files to be processed, while the ‘Pre-processing’, ‘Conversions’ and ‘Post-Processing’ tabs define the actions that will be performed. For server-triggered tasks a ‘Server Query’ tab defines the query used to select clips for processing, while the ‘Conversions’ and ‘Post-Processing’ tabs are as before.

File-triggered tasks

The ‘Trigger’ tab defines whether files are processed because they appear in a watch folder or when they are dragged and dropped. The ‘Conditions’ tab defines various filter conditions that the file must match for this watch action to be performed. Finally, the ‘Job Details’ and ‘Post-Processing’ tabs define the actions to be performed on matching files.

To use watch folders the root watch folder must be specified. You can choose whether to only analyse files that appear directly in the watch folder or also, recursively, in any subdirectory.

Alternatively, you can also enable drag and dropping of files directly onto the worker node application window. You must enable either the watch folder or dropping files (or both) or the watch action won’t be active (though there may be situations where it’s useful to disable a watch folder so it isn’t scanned without having to delete the entire watch action definition).

To avoid processing a file while it is still being captured or copied a settle period can be defined. Files are ignored if they are less than the given number of seconds old. The check interval defines how often the watch folder is scanned. Increase this period if you are recursively scanning large directories on a slow external volume. (These fields can be left blank to use their default values.)
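
The settle period check can be pictured as a simple age test applied during each scan. The following Python sketch is illustrative only: the function names are hypothetical, and it assumes that a file's age is measured from its last modification time, which the manual does not state explicitly.

```python
import os
import time
from typing import Iterator


def is_settled(modified_time: float, now: float, settle_seconds: float) -> bool:
    """A file has settled once it is at least settle_seconds old."""
    return now - modified_time >= settle_seconds


def scan_watch_folder(root: str, settle_seconds: float,
                      recursive: bool = True) -> Iterator[str]:
    """Yield the settled files in the watch folder (one pass of the scan)."""
    now = time.time()
    for folder, subfolders, files in os.walk(root):
        if not recursive:
            subfolders.clear()  # stop os.walk descending below the root
        for name in files:
            path = os.path.join(folder, name)
            if is_settled(os.path.getmtime(path), now, settle_seconds):
                yield path
```

A file that is still being copied keeps getting a fresh modification time, so it is skipped on each scan until copying stops and the settle period has elapsed.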

Watch conditions

If you want to limit the processing to files of a particular type you can do so by setting various filter conditions.

The simplest and most efficient test is to only include files whose name matches a particular pattern, or whose name does not match the ‘exclude’ pattern. The filters can be either simple patterns, such as ‘*.mov’, or, if the regex box is checked, complex regular expressions such as ‘/[A-Za-z]+\.mp4$’. Case is normally ignored when matching these patterns.
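
As a rough illustration of how include and exclude patterns combine, the following Python sketch uses the standard fnmatch and re modules. It is not the Worker's own matching code: the function names are hypothetical, and it assumes that a regular expression may match anywhere in the text it is given and that blank patterns are not checked.

```python
import fnmatch
import re


def name_matches(text: str, pattern: str, is_regex: bool = False) -> bool:
    """Case-insensitive match against a simple pattern or a regular expression."""
    if is_regex:
        return re.search(pattern, text, re.IGNORECASE) is not None
    return fnmatch.fnmatchcase(text.lower(), pattern.lower())


def passes_filters(text: str, include: str = "", exclude: str = "",
                   is_regex: bool = False) -> bool:
    """Apply the include pattern, then the exclude pattern; blank means don't care."""
    if include and not name_matches(text, include, is_regex):
        return False
    if exclude and name_matches(text, exclude, is_regex):
        return False
    return True
```

For example, passes_filters("Clip.MOV", include="*.mov") is true because case is ignored, while the regular expression ‘/[A-Za-z]+\.mp4$’ accepts “/Volumes/Media/Interview.mp4” but rejects “/Volumes/Media/clip01.mp4” because of the digits in the name.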

Other tests involve analysing the file by trying to open it as a media file (using QuickTime). You can choose one of various basic types (eg. QuickTime movie, AVI movie, MPEG movie, Still image, Non media file) from the drop down, or for media files add additional criteria based on the movie duration in seconds, the audio and video format, or testing for arbitrary track types.

In each case, you can check for the presence or absence of a particular property, and if you leave a field blank the condition will not be checked.

For video and audio tracks a description of the format (like the Video and Audio fields of CatDV) is checked for the presence of the text you type in. For example, Video might say “Photo – JPEG (480x320 30.0fps)” while the Audio description might be “MPEG Layer-3 Audio (44.1 kHz, stereo, 16 bit)”. Any substring will match, so you could type “JPEG” or “30.0fps” or “Photo – JPEG (480x320” in a condition.
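
The substring test, together with the presence/absence choice and the “blank means don’t care” rule, can be sketched in a few lines of Python. The function name is hypothetical, and the sketch assumes the comparison is case-sensitive, which the manual does not specify.

```python
def format_matches(description: str, wanted: str, must_be_present: bool = True) -> bool:
    """Check a Video or Audio format description for a piece of text."""
    if not wanted:
        return True  # blank field: the condition is not checked
    found = wanted in description
    return found if must_be_present else not found
```

With the Video description “Photo – JPEG (480x320 30.0fps)”, the conditions “JPEG” and “30.0fps” both match, while “ProRes” only passes when testing for absence.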

To test for the presence of arbitrary track types, enter the type and subtype codes as displayed in the QT Tracks field of CatDV.

Pre-processing

You can pre-process files by moving or renaming them (including changing the file extension or Mac file type/creator) prior to importing or converting the files, for example to give files a unique name early on in the process if they would otherwise be known as something generic like C0001.MXF or IMG0001.JPG. It can also be useful when dealing with long filenames or those containing accented characters, which can cause problems in QuickTime under Windows.

There are several renaming options available. The file can be named by concatenating the folder and file name, by using a timestamp based on the current date and time, or by moving files to a destination folder and numbering the files consecutively so they get a unique name that doesn’t clash with files already there.

The naming drop down is used to specify both the destination folder (when moving files) and the file name. Some combinations are not permitted, for example “Rename file” and “Use enclosing folder”, as the latter is used when moving a file to another folder but keeping the original filename, and the former is used when renaming a file but leaving it in its original folder. See “How file naming works” below for further details.

The destination field is used both to specify the destination folder (when copying or moving a file) and to enter the filename pattern when expanding variables. These can be combined, for example you could specify a destination of “/MediaArchive/$Q_$1_$2$e” and then pass in a file called “/Volumes/XYZ/A001/A001_B02_1234_HI.MOV” to give “/MediaArchive/XYZ_A001_B02.MOV”.

Most pre-processing operations refer to a single file but when dealing with things like P2 volumes you can copy or move the entire enclosing folder structure when the action is triggered. You need to specify a root folder (see below) and it’s the top level folder within the root that will be moved. For example, if the action is triggered by a file “/Folder/Subfolder/File1.mov” within “/Root” then /Root/Folder (and all its contents) will be copied or moved.
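
The rule for choosing which folder is copied or moved can be illustrated with Python's pathlib. This is a sketch under stated assumptions: the function name is hypothetical, and raising an error for a file that sits directly in the root is a choice made for the example rather than documented Worker behaviour.

```python
from pathlib import PurePosixPath


def folder_to_move(root: str, file_path: str) -> PurePosixPath:
    """Return the top-level folder within root that contains file_path."""
    root_path = PurePosixPath(root)
    # relative_to() raises ValueError if the file is not inside the root.
    relative = PurePosixPath(file_path).relative_to(root_path)
    if len(relative.parts) < 2:
        raise ValueError("file sits directly in the root, so there is no enclosing folder")
    return root_path / relative.parts[0]
```

Calling folder_to_move("/Root", "/Root/Folder/Subfolder/File1.mov") gives /Root/Folder, matching the example above.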

Importing media files

After pre-processing, the file needs to be “imported” to extract technical metadata (including its duration, format, timecode, and the tape name, if present in a timecode track) and create a clip record before you can transcode it to another format or publish it to the server. Optionally, scene detection is performed, using either DV-based start/stop recording information or visual scene analysis, to create separate subclips. A separate thumbnail image of the specified size is created for each clip.

The following importers are available:

AVI/WAV File This uses CatDV’s built in parser for AVI and WAV files

Avid AAF File Import one or more clips from an Advanced Authoring Format file

Avid AVB File Perform a one-off import of the contents of an Avid Bin file

Avid AVB Folder Sync Process bin files whenever they change and check for existing clips on the server based on UMID, then either update the existing clip or import as a new clip.

CatDV Catalog (Quick) Open a .cdv catalog file, with the option to replace an existing catalog on the server in its entirety (may be used to sync catalogs from one machine to another)

CatDV XML File Import a CatDV v1 or v2 XML file

FFmpeg Media Import arbitrary video, still or audio media files using FFmpeg

Final Cut XML File Import contents of an FCP7 or FCPX XML file

Folder Clip Import a subdirectory of the watch folder as a generic directory clip without processing its contents.

Generic File This imports a file as a non-media file with a generic icon and no analysis of the media format (such as duration)

Image Sequence If a folder contains consecutively numbered still images the worker can import these as a single image sequence clip.

Java Image Import still images using Java ImageIO and TwelveMonkeys library

JQT MOV/MP4 This uses CatDV’s built in parser for MOV and MP4 files

MPEG Media This uses CatDV’s built in MPEG parser to determine technical metadata for MPEG-1 and MPEG-2 program and transport streams

MXF File This uses CatDV’s built in MXF parser

P2 Metaclip This uses CatDV’s built-in MXF parser and processes all the related files for a P2 clip in one go (video, audio and clip xml details are all stored in separate files but the P2 importer creates a single ‘metaclip’ that represents the clip as a whole)

PDF Document This uses CatDV’s built-in PDF parser

R3D File This uses CatDV’s built-in parser to read metadata from a single RED .r3d file

RED Metaclip Import a RED clip, creating a single metaclip that includes multiple spanned .r3d files and associated files such as reference movies in the same folder

TIFF/RAW Image Import still camera raw files (eg. CR2, DNG, NEF, MOS etc.) and TIFF images

WMV/ASF File This uses CatDV’s built-in parser for Windows Media files.

XDCAM Metaclip Import XDCAM, XDCAM EX and XDCAM HD folder structures and create a single ‘metaclip’ that wraps up all the files associated with a clip

In most cases you can use the “Automatic” setting to automatically determine the correct importer to use for common media file types.

If you choose the “Import all files” option then other types of file that the worker doesn’t know about are imported as generic files. Additionally, if a known file type is corrupt and importing it normally gives an error, the worker will fall back to importing it as a generic file.

If you choose the “Interpret batch files” option then the contents of known batch and log files (with extension .cmx, .edl, .ale, .txt, .tsv, .xml, .srt, .tab, .dmp, and .fcpxml) are parsed using the corresponding CatDV importer (eg. the CatDV Pro “Import As Tab-Separated Text” command). This can result in many clips being produced (one per entry in the batch file), including offline clips.

If you choose the create MXF Metaclips “Based on folder structure” or “Based on MXF UMID” option then Avid, P2, XDCAM and similar formats where one clip can consist of separate files will result in one “metaclip” rather than separate individual clips in your catalog.

To create a clip per file you should therefore check the “Import all files” option, uncheck “Interpret batch files” and choose the “Don’t create metaclips” option.

In most cases you should choose “Based on folder structure” rather than “Based on MXF UMID” as this is more efficient, and gives more consistent results when running with multiple worker processes. You should only choose the MXF UMID option if you are working with Avid MXF files or need to merge spanned P2 clips into a single metaclip.

Automatically keeping catalogs in sync with a disk or folder

A common use of the worker is to automatically catalog an entire volume or folder and keep the catalog up to date as new files are added. To do this efficiently and provide more flexibility and control over the process this is normally done by breaking it down into a number of related tasks:

i) Use Quick Folder Scan to quickly scan the volume and create generic stub clips for each file, permitting files to be accessed or archived via CatDV as soon as they appear on disk. Set a clip status field to ‘Awaiting analysis’.

ii) Perform a server query to search for files of status ‘Awaiting analysis’ and perform media analysis on these to extract thumbnails and technical metadata. This will add each file to the task queue for separate processing but can run as a low priority background task. On completion, update the status to ‘Analysed’.

iii) Use another server query task to transcode the files and create proxy versions as required, either automatically (perhaps all video clips) or manually (based on the user selecting the clips they want proxies for within CatDV and ticking a checkbox to say ‘Create proxy’). On completion set the status to say ‘Proxy created’.

iv) Finally, you can have a bulk server query that tests whether all the files listed in the catalog are still available online and/or whether proxy files exist, and can update other clip fields to say ‘Original file exists’ and ‘Proxy file exists’.

v) To detect when files have been deleted and then remove the corresponding clip use the ‘Detect file deletes’ quick folder scan option (see below).

These steps can be combined if required, for example combining ii) and iii) to create proxies at the same time as the files are analysed.

Unless the volume or folder being monitored is very small you may want to place clips into different catalogs based on the folder hierarchy. For example, if you have a watch folder on /Volumes/SAN and files are organised into a hierarchy based on customer name and project number then you might want to create a separate catalog for each project. The $P variable contains the path of each file relative to the watch folder root, but as the project may contain subfolders we want to use only the first two components of $P as the catalog name. We can do this using regular expressions, first to convert Windows paths to Unix-style paths if necessary and then to pull out the first two path components. The pattern /[^/]+/ matches a / followed by one or more non-/ characters followed by another /. The Quick Folder Scan action might therefore publish to catalog name:

SAN$P{s,\\,/,g}{s,(^/[^/]+/[^/]+)/.*,$1}

Even easier, the following does the same thing, as Windows backslashes are now automatically converted to forward slashes in a catalog name:

SAN/$P[1..2]
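
To see what the two regular expression substitutions do, the same steps can be reproduced with Python's re module. This only imitates the effect of the expression on a sample path; it is not how the Worker evaluates variables. The catalog_name function is hypothetical, and the sketch assumes $P begins with a path separator, as the ^/ in the pattern implies.

```python
import re


def catalog_name(relative_path: str, prefix: str = "SAN") -> str:
    """Imitate SAN$P{s,\\\\,/,g}{s,(^/[^/]+/[^/]+)/.*,$1} for one value of $P."""
    # First substitution: convert Windows backslashes to forward slashes.
    unix_path = relative_path.replace("\\", "/")
    # Second substitution: keep only the first two path components.
    first_two = re.sub(r"(^/[^/]+/[^/]+)/.*", r"\1", unix_path)
    return prefix + first_two
```

A file at “/Acme/P1234/Rushes/Day1/clip.mov” relative to the watch folder therefore ends up in the catalog “SAN/Acme/P1234”, as does the Windows-style path “\Acme\P1234\Rushes\clip.mov”.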

When checking whether clips are still online at step iv) we can search for clips where the catalog name starts with “SAN”.

(You might also want further statuses such as ‘Ready for logging’ and ‘Logged’ and move clips or files to another catalog or library location once they are logged, but this depends on your desired workflow.)

Publish and sync options

When scanning a watch folder and publishing changes to the server the following options are available. Some are only available with a quick folder scan (which compares an entire folder with what’s on the server in one go, but without detailed media file analysis) or with a regular watch folder (which processes one file at a time, with full media analysis).

Check size & date

Normally folder scan just checks for an existing clip with the same file path but with this option if the file size or date has changed it is processed again as if it’s a new file. (With a regular watch folder action the file is always processed again if the file size changes.)

Open early

Open the target catalog early, before ingesting the file, so we can test for existing clips with the same path and skip the import if it’s a duplicate. This doesn’t work if the catalog contains a variable expression that depends on the clip.

Avoid duplicates

Skip the file if it already exists in the target catalog, based on the file path. Requires the ‘open early’ option. (This is an older mechanism and has been superseded by quick folder sync and detecting file moves if more advanced workflows are required.)

Update existing

Similar to ‘avoid duplicates’, this looks for an existing clip in the target catalog and, if one is found, reanalyses the file to update the technical metadata on the existing clip.

Detect file moves

Rather than just checking for an existing clip with the same file path, when a new file is detected the worker calculates a file hash and checks for an existing clip with the same hash. If that clip is now offline, and if the filename is the same but in a different directory, or the directory is the same but the name has changed, this indicates that the file has been moved or renamed and the existing clip is updated.
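
The move detection rule can be sketched as follows. This is a simplified Python model, not the Worker's implementation: the Clip class and function names are hypothetical, and SHA-256 over the whole file is an assumption, since the manual does not say which hash is used.

```python
import hashlib
import os
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass
class Clip:
    media_path: str
    file_hash: str
    online: bool


def hash_file(path: str, chunk_size: int = 1 << 20) -> str:
    """Assumption: SHA-256 of the whole file, read in chunks."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_moved_clip(new_path: str, new_hash: str,
                    clips: Iterable[Clip]) -> Optional[Clip]:
    """Return the offline clip that new_path is a moved or renamed copy of."""
    new_dir, new_name = os.path.split(new_path)
    for clip in clips:
        if clip.online or clip.file_hash != new_hash:
            continue  # only offline clips with the same hash are candidates
        old_dir, old_name = os.path.split(clip.media_path)
        moved = old_name == new_name and old_dir != new_dir
        renamed = old_dir == new_dir and old_name != new_name
        if moved or renamed:
            return clip
    return None
```

Note that a file which has changed both its name and its directory is not treated as a move in this sketch, in line with the description above.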

Move proxies

If a file move has been detected and the media path of an existing clip is updated then move or rename any corresponding proxies in the same way.

Update catalog

If the catalog we are publishing to contains variables (typically based on the file path, because the catalog structure mirrors the directory structure), use this option so that when a moved file is detected and its media path updated the clip is also moved to the corresponding new catalog.

Detect file deletes

Before the initial file scan takes place the worker gets a list of all the files within the watch folder that the server knows about. If any of those files are now missing the corresponding clips are moved to a special ‘Deleted Files’ catalog.
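
In essence, detecting deletes is a comparison between the paths the server knows about and the paths found on disk. A minimal Python sketch of that comparison (the function name is hypothetical, and moving the clips to the ‘Deleted Files’ catalog is left out):

```python
from typing import Iterable, List


def missing_files(server_paths: Iterable[str], disk_paths: Iterable[str]) -> List[str]:
    """Return the paths the server knows about that are no longer on disk."""
    on_disk = set(disk_paths)
    return sorted(path for path in server_paths if path not in on_disk)
```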

Widen search

To protect against treating a file that has been archived and purged as deleted, detection of deleted files doesn’t apply to clips with a blank archive status. Also, when detecting file moves, normally only candidates within the same production group and root watch folder are considered. The widen search option relaxes these checks.

Any catalog

When doing a folder scan or detecting moved files, check against any catalog on the server (in the current production group), not just the specified catalog.

If both ‘Any catalog’ and ‘Update catalog’ are selected, and you are maintaining a catalog structure to mirror the top few levels of the folder structure, then clips that have been moved to the wrong catalog (or that are affected by a change to the catalog structure) will be moved to the correct catalog. (This doesn’t happen immediately because the folder scan is triggered by changes on disk, so after changing the catalog structure you should restart the worker.)

Replace catalog

When importing CDV catalog files and publishing them to the server this will replace an existing catalog with the same name.

CatDV XML batch files (v1)

You can submit a batch of media files to the CatDV Worker for processing by providing a list of file names (optionally with metadata) in the CatDV XML file format. You can create an example XML file in the format the worker expects by exporting clips from CatDV using File > Export As > CatDV XML Batch File, though not all the fields in that file are used when you reimport a CatDV XML file. The main field you need to specify is the MEDIAPATH of the file to import but you can also specify additional metadata, for example:

<CLIPS>

<CLIP>

<MEDIAPATH>/Volumes/Media/Folder/File1.mov</MEDIAPATH>

</CLIP>

<CLIP>

<NAME>My clip name</NAME>

<MEDIAPATH>/Volumes/Media/File2.mp4</MEDIAPATH>

<NOTES>This is a clip with metadata</NOTES>

<USER1>User field one</USER1>

<TAPE>Tape001</TAPE>

<BIN>Bin name</BIN>

</CLIP>

</CLIPS>

There are two ways to import a CatDV XML file, in either “batch” or “immediate” mode. For further details, see the sections on Batch Operation and Offline Workflows below.

If you are submitting a batch of files to the worker for processing, possibly involving a transcode of each file, then you need to create a CatDV XML batch file (version 1) and import it in “batch” mode to create a separate worker task for each file. If you are importing a set of clips in one go (or updating existing clips) then you should use the new CatDV XML file format version 2.
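
If you generate version 1 batch files from a script, a small helper along the following lines may be useful. This Python sketch uses only the element names shown in the example above (CLIPS, CLIP, MEDIAPATH, NAME, TAPE and so on); the build_batch function itself is hypothetical, and you should compare its output with a file exported from CatDV before relying on it.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Iterable


def build_batch(clips: Iterable[Dict[str, str]]) -> str:
    """Build a CatDV XML batch file (v1) from a list of field dictionaries."""
    root = ET.Element("CLIPS")
    for fields in clips:
        if "MEDIAPATH" not in fields:
            raise ValueError("each clip needs a MEDIAPATH")
        clip = ET.SubElement(root, "CLIP")
        for tag, text in fields.items():
            # ElementTree escapes characters such as & and < in the text.
            ET.SubElement(clip, tag).text = text
    return ET.tostring(root, encoding="unicode")
```

Building the XML with a library rather than by string concatenation also guarantees that every opening tag has a matching closing tag.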

New CatDV XML files (v2)

While version 1 XML batch files will continue to be supported, the new CatDV version 2 XML file format is more modern and more flexible and properly supports sequences, subclips, markers, metaclips, thumbnails, and more.

To import a clip by analysing the media file but specifying certain metadata fields you can write:

<catdv version='2.0'>

<options analyseMedia='true' updateOrAdd='add' />

<clip>

<name>My clip name</name>

<notes>This is a clip with metadata</notes>

<tape>Tape001</tape>

<bin>Bin name</bin>

<metadata>

<field index='1' name='User 1'>User field 1</field>

<field index='23' type='multi-picklist' name='Weather'>

<value>Rain</value>

<value>Cloud</value>

</field>

</metadata>

<media>

<filePath>/Volumes/Media/File2.mp4</filePath>

<metadata>

<field key='Author'>Joe Smith</field>

</metadata>

</media>

</clip>

</catdv>

You can use XML to delete an existing clip on the server:

<catdv>

<options updateOrAdd='delete' searchBy='remoteID'/>

<clip>

<remoteID>623462</remoteID>

</clip>

</catdv>

The following example demonstrates creating a clip and a subclip referring to the same source media object, without performing media file analysis:

<catdv>

<clip>

<name>File.mov</name>

<type>Movie</type>

<notes>Master clip</notes>

<in seconds="0.0">0:00:00:00</in>

<out seconds="10.0">0:00:10:00</out>

<timecodeFormat>25.0</timecodeFormat>

<media id='0'>

<filePath>/Volumes/Media/Offline/File.mov</filePath>

<video>Apple ProRes 422 (HQ) (1920x1080 25.0fps)</video>

<audio>24-bit Integer (48.0 kHz, stereo, 16 bit)</audio>

</media>

</clip>

<clip>

<name>Subclip</name>

<type>Movie</type>

<in seconds="5.0">0:00:05:00</in>

<out seconds="7.0">0:00:07:00</out>

<media id='0'/>

</clip>

</catdv>
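
To illustrate how the shared media id ties a subclip back to its source media, the following Python sketch reads a cut-down copy of the example above and resolves each clip's file path. It is a reading aid only: the clip_file_paths function is hypothetical and handles just the elements shown here.

```python
import xml.etree.ElementTree as ET
from typing import Dict, Optional

EXAMPLE = """<catdv>
  <clip>
    <name>File.mov</name>
    <media id='0'>
      <filePath>/Volumes/Media/Offline/File.mov</filePath>
    </media>
  </clip>
  <clip>
    <name>Subclip</name>
    <media id='0'/>
  </clip>
</catdv>"""


def clip_file_paths(xml_text: str) -> Dict[str, Optional[str]]:
    """Map each clip name to its media file path, following media id references."""
    root = ET.fromstring(xml_text)
    # First pass: remember the path of every fully described media object.
    paths_by_id: Dict[str, str] = {}
    for media in root.iter("media"):
        media_id = media.get("id")
        file_path = media.findtext("filePath")
        if media_id is not None and file_path is not None:
            paths_by_id[media_id] = file_path
    # Second pass: resolve each clip, using the id when the path is omitted.
    result: Dict[str, Optional[str]] = {}
    for clip in root.iter("clip"):
        name = clip.findtext("name", default="")
        media = clip.find("media")
        if media is None:
            result[name] = None
        else:
            own_path = media.findtext("filePath")
            result[name] = own_path if own_path is not None else paths_by_id.get(media.get("id"))
    return result
```

Both “File.mov” and “Subclip” resolve to /Volumes/Media/Offline/File.mov, because the empty <media id='0'/> element refers back to the media object defined in the first clip.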

Notes on the new XML file format:

· Although none of the fields are mandatory when importing a clip, in order to create a useful clip (without relying on CatDV or the Worker analysing a media file) as a minimum you should ideally provide all the following: <name>, <type>, <timecodeFormat>, <in>, <out> (or <duration>), plus a <media> object with <filePath>, <video> and <audio> elements.

· The following timecode formats are supported: 1.0, 10.0, 15.0, 23.98, 24.0, 25.0, 29.97, 29.97 nd, 30.0, 50.0, 59.94, 59.94 nd, 60.0, 100.0.

· <clip> and <media> (and <importSource>) elements can include an id attribute, which allows multiple subclips that refer to the same source media object to be represented. It also allows sequences to refer to their source clips. (For <media> and <importSource> the id is an integer, while <clip> can use any unique string.)

· There is a consistent syntax for user-defined and media metadata fields, paving the way for general metadata fields in CatDV Server 7.

· Multi-grouping fields take multiple values by providing multiple <value> elements rather than using a new line delimiter.

· Markers have separate elements for <name>, <category> and <description>, which allows for additional custom marker columns to be added when using CatDV Server 7.

· Time code values and timestamps (and also file sizes, data rates, and clip types) are stored both as an internal representation and a user-friendly formatted representation. For example, <in seconds='1.5'>0:00:01;15</in>, <modified timestamp="1420560808038">Jan 6, 2015 16:13:28</modified>, <fileSize bytes="1116192000">1.04 GB</fileSize>, etc. On export both representations are written, but on import the internal representation is preferred.

· Thumbnails can be embedded in the XML file, stored as base64 encoded JPEG data (which must not exceed 64KB per thumbnail). This allows a complete clip representation including thumbnails to be imported into CatDV via XML, even if the media file is offline.

· For examples of other features such as <sequence>, <metaclip>, and <importSource> elements, please export a version 2 XML file from CatDV or the Worker. A DTD can be provided on request.
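The clip and subclip example above can also be generated by a script. The following is a minimal Python sketch, not an official tool: the helper names format_timecode and add_clip are illustrative, it assumes non-drop-frame timecode at an integer frame rate, and it omits the recommended <video> and <audio> elements for brevity.

```python
import xml.etree.ElementTree as ET


def format_timecode(seconds, fps):
    """Format seconds as h:mm:ss:ff (assumes non-drop-frame, integer fps)."""
    total_frames = int(round(seconds * fps))
    frames = total_frames % fps
    total_seconds = total_frames // fps
    return "%d:%02d:%02d:%02d" % (
        total_seconds // 3600, (total_seconds // 60) % 60,
        total_seconds % 60, frames)


def add_clip(root, name, in_secs, out_secs, fps, media_id, file_path=None):
    """Append a <clip> that refers to the media object with the given id."""
    clip = ET.SubElement(root, "clip")
    ET.SubElement(clip, "name").text = name
    ET.SubElement(clip, "type").text = "Movie"
    # Write both representations: seconds attribute plus formatted timecode.
    for tag, secs in (("in", in_secs), ("out", out_secs)):
        element = ET.SubElement(clip, tag, seconds=str(float(secs)))
        element.text = format_timecode(secs, fps)
    ET.SubElement(clip, "timecodeFormat").text = str(float(fps))
    media = ET.SubElement(clip, "media", id=str(media_id))
    if file_path is not None:
        ET.SubElement(media, "filePath").text = file_path
    return clip


root = ET.Element("catdv")
# The master clip defines media object 0; the subclip refers to it by id only.
add_clip(root, "File.mov", 0.0, 10.0, 25, 0, "/Volumes/Media/Offline/File.mov")
add_clip(root, "Subclip", 5.0, 7.0, 25, 0)
xml_text = ET.tostring(root, encoding="unicode")
```

Because both clips use the same media id, the subclip does not need to repeat the file path.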

When importing an XML file you can provide an <options> element to control how the XML file is interpreted on import and whether to update existing clips or add new ones:

· You can control whether to analyse the media file on import (to determine the clip duration, technical metadata, extract metadata from the file name or a sidecar file) using <options analyseMedia='true'/>. By default, media files are not analysed and all the technical and other metadata is specified in the XML file.

· You can specify whether to update existing clips stored on the server or insert new clips using an element such as <options updateOrAdd='updateOrAdd' searchBy='filePath'/>.

· Valid update modes are ‘add’ (this is the default and results in new clips being added even if they already exist), ‘addIfNew’ (add a clip if it doesn’t already exist, otherwise do nothing), ‘updateOrAdd’ (update an existing clip if it exists or add a new one), ‘updateOrIgnore’ (update an existing clip if it exists, otherwise do nothing) and ‘updateOrFail’ (update an existing clip if it is found, otherwise report an error).

· The special update mode ‘delete’ can be used to delete existing clips on the server.

· Existing clips can be searched for by ‘remoteID’ (the unique integer database id of a clip), ‘clipRef’ (the user-settable clip ID), ‘filePath’, ‘clipRefAndPath’, ‘catalogAndPath’, or ‘catalogAndClipRef’. Note that only remoteID is guaranteed to be absolutely unique so any of the other options could result in multiple clips being updated (or deleted).

· Normally updating or adding clips always applies to the server, whether the XML file is processed by the Worker or by the CatDV/Pegasus desktop clients. Use <options updateLocal='true'/> to update clips in the currently open catalog in memory in the desktop client.

· If you update an existing clip via XML only those fields which are specified in the XML file are updated and other fields keep their original values. You can update user defined and media metadata fields, standard clip fields such as name, notes, status, tape, bin, record date, and rating, markers, plus the media path and archive status, via XML. With care you can also update the clip in and out points, type, poster, and clipRef. When updating markers, the markers you specify are normally added to any existing markers unless you specify <markers replace='true'>…</markers>.

· When updating clips you can choose whether to add new thumbnails to the existing ones, or to delete the existing ones first and replace them, by specifying <thumbnails replace='true'>.

· When updating an existing clip you can specify how to combine the new value for certain elements (<notes>, <bigNotes>, and <field>) with the existing value by adding a merge attribute with values: ‘replace’ (overwrite the old value), ‘prepend’ (prepend the new value to an existing value), ‘append’ (append the new value), ‘default’ or ‘true’ (don’t overwrite an existing value but set it with new value if it is currently not set), and ‘merge’ or ‘combine’ (split the values into lines and merge the two sets of lines). For example, <notes merge='true'>Under review</notes>.

· You can have several <options> elements, each of which will apply to all subsequent clips in that file until the next <options> element is encountered.

· When importing clips via XML the import source is normally set to describe the XML file the clips were imported from. It is possible to override this by providing an <importSource> element and specifying <options importSource='true'/>.
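The update modes listed above amount to a small decision table. The Python sketch below restates that table for illustration only; the function name resolve_update_action and the action labels are hypothetical, and the behaviour of ‘delete’ when no matching clip is found is an assumption, as it is not described above.

```python
def resolve_update_action(mode, clip_exists):
    """Return 'add', 'update', 'skip', 'delete' or 'error' for an update mode."""
    table = {
        # mode: (action if a matching clip exists, action if it does not)
        "add": ("add", "add"),
        "addIfNew": ("skip", "add"),
        "updateOrAdd": ("update", "add"),
        "updateOrIgnore": ("update", "skip"),
        "updateOrFail": ("update", "error"),
        # Assumption: deleting a clip that cannot be found does nothing.
        "delete": ("delete", "skip"),
    }
    if mode not in table:
        raise ValueError("unknown update mode: %r" % mode)
    if_exists, if_missing = table[mode]
    return if_exists if clip_exists else if_missing
```

For instance, resolve_update_action("updateOrIgnore", False) yields "skip", while the default mode "add" yields "add" whether or not the clip already exists.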

Metadata extraction rules

Many workflows involve importing files that have been named according to strict naming conventions and where the file name or file path is structured to represent elements such as the series and episode of a show, the type of shot, the camera used, the scene and take number, and so on. While it is possible to use the Publish tab to set clip fields based on worker variables and regular expressions applied to the file path, this can be awkward when the file naming conventions become more complex.

A new and more powerful metadata extraction mechanism has been added which involves providing an external mapping file that lists patterns to look for and then which metadata fields to set when importing a file if those patterns are found in the file path.

You can specify a file containing metadata extraction rules on the Options tab of the worker config. Each line of the file has the form: pattern, tab, field id, tab, value to set, newline. The pattern can either be a simple text pattern or a regular expression. Blank lines and comment lines starting with a semicolon are ignored.

If the pattern contains nothing but upper or lower case letters and numbers then it’s taken as a token that is looked for in the file name. Tokens in the file name must match case and be delimited by a space or punctuation character. For example, the file name “FB_S2_XYZ_Scene01.mov” would match FB and S2 but not s2 or XY or 01.

For example, with the following metadata extraction rules, user field 1 would be set to “Football” and user field 2 to “Season 2” when the file above is imported.

FB U1 Football

BB U1 Basketball

S1 U2 Season 1

S2 U2 Season 2
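To make the token matching rule concrete, here is a minimal Python sketch that parses the four rules above and applies them to the example file name. It is an approximation for illustration, not the Worker's actual implementation: the function names are hypothetical, and it assumes that the start and end of the file name also count as token delimiters.

```python
import re


def parse_token_rules(text):
    """Parse 'pattern<TAB>field id<TAB>value' lines, skipping blanks and ';' comments."""
    rules = []
    for line in text.splitlines():
        if not line.strip() or line.lstrip().startswith(";"):
            continue
        pattern, field, value = line.split("\t", 2)
        rules.append((pattern, field, value))
    return rules


def token_matches(token, file_name):
    """True if token occurs case-sensitively and is not adjacent to a letter or digit."""
    regex = r"(?<![A-Za-z0-9])" + re.escape(token) + r"(?![A-Za-z0-9])"
    return re.search(regex, file_name) is not None


def apply_token_rules(rules, file_name):
    """Return a dict of field id -> value for every rule whose token matches."""
    fields = {}
    for pattern, field, value in rules:
        if token_matches(pattern, file_name):
            fields[field] = value
    return fields


RULES = (
    "; sport and season codes\n"
    "FB\tU1\tFootball\n"
    "BB\tU1\tBasketball\n"
    "S1\tU2\tSeason 1\n"
    "S2\tU2\tSeason 2\n"
)
result = apply_token_rules(parse_token_rules(RULES), "FB_S2_XYZ_Scene01.mov")
```

Here result maps U1 to “Football” and U2 to “Season 2”. Explicit lookarounds are used rather than \b because the underscore counts as a word character in regular expressions, whereas in file names it acts as a delimiter.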

If the pattern contains anything other than basic alphanumeric characters it is taken to be a regular expression, where certain symbols such as . + * ( ) [ ] ^ $ \ { } etc. have special meanings.

If the pattern starts with lower case ‘m’ or ‘n’ and is followed by a punctuation character such as comma, semicolon or slash then it is taken to be a regular expression that applies to the entire file path or just the file name respectively, and the specified character is the delimiter at the end of the regular expression (as you might want to use something other than / when matching file paths). In either case, you can follow the regular expression with ‘i’ to ignore case. The value of the first subexpression within parentheses is available in $1, the second set of parentheses as $2 and so on, and $0 refers to the full pattern.

For example, you might have

n/Scene(\d+)/ U3 Scene number $1

This would look for a sequence of digits occurring after the text “Scene” anywhere in the file name, and if found store the scene number in user field 3.

You can use a more complex pattern like m,/([^/]+)/[^/]+$, to extract the parent folder into $1, using comma as the regex delimiter and where forward slash is the file path separator on Mac OS X and Unix. [^/]+ means any sequence of one or more characters which aren’t a slash and ‘$’ anchors the search to the end of the file path.
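The ‘m’ and ‘n’ pattern syntax can be illustrated with a short Python sketch. This is a simplified approximation rather than the Worker's own parser: the function names are hypothetical, Python's regular expression dialect is assumed to be close enough to the Worker's for these examples, and only single-digit references ($0 to $9) are handled.

```python
import re


def parse_regex_pattern(pattern):
    """Split a pattern such as n/Scene([0-9]+)/i into (scope, compiled regex)."""
    scope = "path" if pattern[0] == "m" else "name"
    delimiter = pattern[1]
    end = pattern.rindex(delimiter)
    if end < 2:
        raise ValueError("missing closing delimiter in %r" % pattern)
    body, flags = pattern[2:end], pattern[end + 1:]
    return scope, re.compile(body, re.IGNORECASE if "i" in flags else 0)


def apply_regex_rule(pattern, value, file_path):
    """Return value with $0, $1, ... filled in, or None if the pattern does not match."""
    scope, regex = parse_regex_pattern(pattern)
    # 'm' patterns see the whole path, 'n' patterns only the file name.
    subject = file_path if scope == "path" else file_path.rsplit("/", 1)[-1]
    match = regex.search(subject)
    if match is None:
        return None
    return re.sub(r"\$(\d)",
                  lambda ref: match.group(int(ref.group(1))) or "",
                  value)


path = "/Volumes/Media/Show/FB_S2_XYZ_Scene01.mov"
scene = apply_regex_rule(r"n/Scene(\d+)/", "Scene number $1", path)
parent = apply_regex_rule("m,/([^/]+)/[^/]+$,", "$1", path)
```

With this path, scene becomes “Scene number 01” and parent becomes “Show”.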

If you omit the pattern then you can have several substitutions triggered by the same regular expression, saving on evaluating the expression more than once. For example

m,^/Volumes/Media/([^/]+)/([^/]+)/, U2 $1

U9 $2

would store the first folder after /Volumes/Media in user 2 and the second folder in user 9.
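The following Python sketch shows one way such continuation lines could be evaluated, so that the regular expression is run only once and its result reused. It assumes that a continuation line begins with a tab (that is, an empty pattern field) and, for brevity, it handles only ‘m’ style patterns without flags; the function name apply_rules is hypothetical.

```python
import re

RULES = (
    "m,^/Volumes/Media/([^/]+)/([^/]+)/,\tU2\t$1\n"
    "\tU9\t$2\n"
)


def apply_rules(text, file_path):
    """Apply rules to file_path; lines with an empty pattern reuse the last match."""
    fields, match = {}, None
    for line in text.splitlines():
        if not line.strip() or line.startswith(";"):
            continue
        pattern, field, value = line.split("\t", 2)
        if pattern:
            # New pattern: evaluate it once and remember the result.
            delimiter = pattern[1]
            body = pattern[2:pattern.rindex(delimiter)]
            match = re.search(body, file_path)
        if match:
            fields[field] = re.sub(
                r"\$(\d)", lambda ref: match.group(int(ref.group(1))), value)
    return fields


fields = apply_rules(RULES, "/Volumes/Media/Acme/Promo/clip.mov")
```

For the hypothetical path shown, fields maps U2 to “Acme” and U9 to “Promo”.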

You could use a similar mechanism to pre-populate a set of metadata fields such as customer name, contact details etc. based on which customer folder the file is in.

Detailing all the capabilities of regular expressions is beyond the scope of this document but if you require help in defining metadata extraction rules (or developing worker scripts more generally) then your systems integrator or our professional services team will be able to help.

To simplify working with a mix of Mac and Windows paths all file path separators are converted from backslashes to Unix-style forward slashes so regular expressions should only check for /, even on Windows. Also, you can define root names and match for these as well, so the pattern above could be written as

#root MEDIA /Volumes/Media

#root MEDIA \\server\media

m,^MEDIA/([^/]+)/([^/]+)/, U2 $1
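As an illustration of how separator conversion and root names fit together, the Python sketch below normalises a path before a rule is matched against it. How the Worker performs the root substitution internally is not documented here, so this is an assumption; the function name normalise_path and the sample paths are hypothetical.

```python
import re


def normalise_path(path, roots):
    """Convert backslashes to '/', then replace a known root prefix with its name."""
    path = path.replace("\\", "/")
    for name, root in roots:
        root = root.replace("\\", "/").rstrip("/")
        if path == root or path.startswith(root + "/"):
            return name + path[len(root):]
    return path


# Equivalent of the two #root lines: the same name for the Mac and Windows locations.
ROOTS = [("MEDIA", "/Volumes/Media"), ("MEDIA", r"\\server\media")]

mac_path = normalise_path("/Volumes/Media/Acme/Promo/clip.mov", ROOTS)
windows_path = normalise_path(r"\\server\media\Acme\Promo\clip.mov", ROOTS)
match = re.search(r"^MEDIA/([^/]+)/([^/]+)/", windows_path)
```

Both paths normalise to MEDIA/Acme/Promo/clip.mov, so a single rule can serve both platforms.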

You can organise rules into separate files and import another file using

#include "/path/to/file.txt"

Metadata naming rules on the server

If you use Server 10 you can now use metadata extraction “naming rules” that are stored on the server and defined using the web interface. Each rule consists of one or more base paths (or root directories) and then a list of clip fields to be set from elements of the file path or file name. You can use value lookups to map or expand elements (eg. P:Pilot, S1:Season 1, etc.).

When you enter the metadata rules file path you have the following options:

· Enter a path to rules stored in a file (as above)

· Leave the file path blank to load naming rules from the server (if present) and do nothing if they are not there

· Enter a ‘*’ to load naming rules from the server and report an error if they are not there

· Enter a ‘-’ to ignore naming rules on the server even if they are there
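The four options above can be summarised as a simple lookup. The Python sketch below is purely illustrative; the function name and the returned labels are hypothetical and do not correspond to any Worker setting.

```python
def naming_rules_source(rules_path):
    """Classify the metadata rules file path setting described above."""
    setting = rules_path.strip()
    if setting == "":
        return "server-if-present"   # load from server, do nothing if absent
    if setting == "*":
        return "server-required"     # load from server, error if absent
    if setting == "-":
        return "ignore-server"       # never use server naming rules
    return "file"                    # treat the setting as a path to a rules file
```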

Exporting watch definitions

It is possible to export watch action definitions (and also conversion presets) by pressing the Export button. This will create an XML file with extension .catdv that can be dragged into another worker (either into the main window or into the watch action list in the configuration dialog) to install the watch action on that machine. If the watch action includes movie conversion steps the required conversion preset definitions are automatically included in the .catdv file.

When you install a watch definition you are prompted whether to replace an existing watch action with the same name or keep both and rename the old one.

You can parameterise .catdv settings files so that when a user installs them they are prompted to specify the values for certain parameters, such as the watch folder location, an FTP password, or YouTube account details, as these will differ from installation to installation.

To parameterise the file you should include text in the format {{Parameter name{Type information}:Default value}}. Everything between {{…}} will be replaced with the appropriate value entered by the user. For example,

<convert previewDir="{{CatDV proxy folder{DIR}:/Volumes/CatDVProxies}}" … >

or

<job … md5Checksums="{{Calculate MD5 checksum?{Yes=true,No=false}}}" … >

You can omit the type information and default value. Omit the type information for plain text fields, use {FILE} or {DIR} for a file or directory chooser, or use a comma-separated list {Option1,Option2,…} for a drop down pick list of values. If required these options can have a different display value from what is inserted into the final definition, for example with {Red=0xf00,Green=0x0f0,Blue=0x00f,Yellow=0xff0} the user could pick Green from the drop down list when installing the action but 0x0f0 would be inserted into the definition.
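To show how the placeholder syntax breaks down, here is a minimal Python sketch that finds each {{…}} placeholder and substitutes a value. It is not the Worker's installer logic: the function names are hypothetical, and it assumes that parameter names contain no colon or braces.

```python
import re

# {{Parameter name{Type information}:Default value}}; type and default are optional.
PLACEHOLDER = re.compile(r"\{\{([^{}:]+)(?:\{([^{}]*)\})?(?::([^{}]*))?\}\}")


def parse_options(type_info):
    """Turn 'Red=0xf00,Green=0x0f0' into a dict of display value -> inserted value."""
    options = {}
    for item in type_info.split(","):
        display, _, inserted = item.partition("=")
        options[display] = inserted if inserted else display
    return options


def fill_parameters(text, answers):
    """Replace each placeholder with the user's answer, or its default if none is given."""
    def substitute(match):
        name, type_info, default = match.group(1), match.group(2), match.group(3)
        answer = answers.get(name, default or "")
        if type_info and type_info not in ("FILE", "DIR"):
            # Pick list: map the displayed choice to the value to insert.
            answer = parse_options(type_info).get(answer, answer)
        return answer
    return PLACEHOLDER.sub(substitute, text)


proxy = fill_parameters(
    '<convert previewDir="{{CatDV proxy folder{DIR}:/Volumes/CatDVProxies}}">', {})
md5 = fill_parameters(
    'md5Checksums="{{Calculate MD5 checksum?{Yes=true,No=false}}}"',
    {"Calculate MD5 checksum?": "Yes"})
```

With no answer supplied, proxy falls back to the default folder, and choosing “Yes” for the checksum question inserts true into the definition.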

Server-triggered tasks

For server-triggered tasks you use the ‘Server Query’ tab to specify a query that will be performed to find matching clips for processing. You can also specify a check interval, and whether only to process clips which have a file which is currently online and accessible. If you specify a root folder, only files within that folder are processed, and that folder is used when calculating relative paths (see below).

Queries are defined in the same way as advanced queries in the CatDV client. If you specify multiple query terms (for example, based on clip status, on modification date, and so on) they must all be true for a clip to be processed unless you check the ‘OR’ box, in which case just one of the OR terms needs to match. Check the ‘NOT’ box to invert that condition (ie. match all clips for which the condition is not true).
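One plausible reading of how the query terms combine is sketched below in Python. This is an assumption made for illustration: it treats every unchecked term as required, requires at least one of the ‘OR’ terms (if there are any) to match, and applies ‘NOT’ to an individual term before combining. The function name and the sample clip fields are hypothetical.

```python
def clip_matches(terms, clip):
    """terms is a list of (predicate, is_or, is_not) tuples; clip is a dict of fields."""
    and_results, or_results = [], []
    for predicate, is_or, is_not in terms:
        result = predicate(clip)
        if is_not:
            result = not result
        (or_results if is_or else and_results).append(result)
    # All plain terms must hold, plus at least one OR term when any are present.
    return all(and_results) and (any(or_results) if or_results else True)


terms = [
    (lambda c: c["status"] == "To be converted", False, False),
    (lambda c: c["bin"] == "Archive", False, True),    # NOT in the Archive bin
    (lambda c: c["type"] == "Movie", True, False),     # OR
    (lambda c: c["type"] == "Audio", True, False),     # OR
]
clip = {"status": "To be converted", "bin": "Rushes", "type": "Audio"}
matched = clip_matches(terms, clip)
```

Here matched is true because both required terms hold and one of the two OR terms matches.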

Just as with watch folders you should take care that you don’t specify actions that will be performed in an endless loop. For example, if you have a server-triggered action based on clips whose status is “To be converted” you should update the status to something different such as “Completed” on successful completion in the post-processing stage. By default, the worker will only process each clip once per session but you can override this if required by checking the “allow polling” option.

Each clip that matches the server query will be added as a separate task into the task queue (as there could be a large number of files that each requires a lengthy transcode). If you perform an operation such as exporting an XML file on the Conversions tab it will therefore export one clip at a time – see the section below on ‘Batch operation’ if you want to export all the matching clips in one go.

Specifying a root folder